GPU inference

GPUs are the standard choice of hardware for machine learning, unlike CPUs, because they are optimized for memory bandwidth and parallelism. To keep up with the larger sizes of modern models or to run these large models on existing and older hardware, there are several optimizations you can use to speed up GPU inference. In this guide, you'll learn how to use FlashAttention-2 (a more memory-efficient attention mechanism), BetterTransformer (a PyTorch native fastpath execution), and bitsandbytes to quantize your model to a lower precision. Finally, learn how to use 🤗 Optimum to accelerate inference with ONNX Runtime on Nvidia and AMD GPUs.

The majority of the optimizations described here also apply to multi-GPU setups!

FlashAttention-2

FlashAttention-2 is experimental and may change considerably in future versions.

FlashAttention-2 is a faster and more efficient implementation of the standard attention mechanism that can significantly speed up inference by:

- additionally parallelizing the attention computation over sequence length

- partitioning the work between GPU threads to reduce communication and shared memory reads/writes between them
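The reason attention can be split over the sequence length at all is that softmax can be accumulated block by block. The following pure-Python sketch illustrates that idea for a single query vector; it is not the FlashAttention-2 CUDA kernel, and the function names `naive_attention` and `blockwise_attention` are hypothetical names chosen for this illustration.

```python
import math


def naive_attention(q, keys, values):
    """Reference attention for one query: softmax over all scores at once."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [
        sum(w * v[d] for w, v in zip(weights, values)) / total
        for d in range(len(values[0]))
    ]


def blockwise_attention(q, keys, values, block_size=2):
    """Same result, but keys/values are visited one block at a time.

    Only a running maximum, a running softmax denominator and a running
    weighted sum are kept, so the full row of scores is never stored.
    """
    scale = 1.0 / math.sqrt(len(q))
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_block = keys[start:start + block_size]
        v_block = values[start:start + block_size]
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in k_block]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated so far to the new reference maximum.
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_block):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * x for a, x in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


# Toy data, made up for the illustration
q = [0.1, 0.7, -0.3]
keys = [[0.2, 0.1, 0.5], [1.0, -0.4, 0.3], [0.0, 0.9, -0.8], [0.6, 0.6, 0.1], [-0.5, 0.2, 0.4]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, -1.0], [-1.0, 3.0]]

reference = naive_attention(q, keys, values)
blocked = blockwise_attention(q, keys, values, block_size=2)
print(reference, blocked)
```

Both functions should agree up to floating point error. The blockwise version only ever holds one block of scores, which is what makes the computation memory-efficient and easy to spread across workers.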

FlashAttention-2 is currently supported for the following architectures:

- Aria

- Bark

- Bamba

- Bart

- Chameleon

- CLIP

- Cohere

- Cohere2

- GLM

- Dbrx

- DiffLlama

- DistilBert

- Emu3

- Gemma

- Gemma2

- GotOcr2

- GPT2

- GPTBigCode

- GPTNeo

- GPTNeoX

- GPT-J

- Granite

- GraniteMoe

- Idefics2

- Idefics3

- Falcon

- JetMoe

- Jamba

- Llama

- Llava

- Llava-NeXT

- Llava-NeXT-Video

- LLaVA-Onevision

- Moonshine

- Mimi

- VipLlava

- VideoLlava

- M2M100

- MBart

- Mistral

- Mixtral

- ModernBert

- Moshi

- Musicgen

- MusicGen Melody

- Nemotron

- NLLB

- OLMo

- OLMo2

- OLMoE

- OPT

- PaliGemma

- Phi

- Phi3

- PhiMoE

- StableLm

- Starcoder2

- Qwen2

- Qwen2Audio

- Qwen2MoE

- Qwen2VL

- Qwen2.5VL

- RAG

- SpeechEncoderDecoder

- VisionEncoderDecoder

- VisionTextDualEncoder

- Whisper

- Wav2Vec2

- Hubert

- data2vec_audio

- Sew

- SigLIP

- UniSpeech

- unispeech_sat

- helium

- Zamba2

You can request to add FlashAttention-2 support for another model by opening a GitHub Issue or Pull Request.

Before you begin, make sure you have FlashAttention-2 installed.

pip install flash-attn --no-build-isolation

We strongly suggest referring to the detailed installation instructions to learn more about supported hardware and data types!

FlashAttention-2 is also supported on AMD GPUs, although support is currently limited to the Instinct MI210, Instinct MI250 and Instinct MI300. We strongly suggest using this Dockerfile to run FlashAttention-2 on AMD GPUs.

To enable FlashAttention-2, pass the argument attn_implementation="flash_attention_2" to [~AutoModelForCausalLM.from_pretrained]:

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "tiiuae/falcon-7b"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

attn_implementation="flash_attention_2",

)

FlashAttention-2 can only be used when the model's dtype is fp16 or bf16. Make sure to cast your model to the appropriate dtype and load it on a supported device before using FlashAttention-2.
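One way to respect the dtype constraint is to decide on the `attn_implementation` value before loading the model. The sketch below is a minimal illustration: `choose_attn_implementation` is a hypothetical helper, not part of Transformers. It only checks the dtype name and whether the `flash_attn` package can be imported, and it does not verify that the GPU itself is supported.

```python
import importlib.util

# dtypes accepted by FlashAttention-2 according to the note above
FLASH_DTYPES = {"float16", "bfloat16"}


def choose_attn_implementation(dtype_name, flash_attn_installed=None):
    """Hypothetical helper: pick a value for the `attn_implementation` argument."""
    if flash_attn_installed is None:
        # find_spec returns None when the package cannot be imported
        flash_attn_installed = importlib.util.find_spec("flash_attn") is not None
    if flash_attn_installed and dtype_name in FLASH_DTYPES:
        return "flash_attention_2"
    # Assumed fallback for this sketch: the SDPA implementation
    return "sdpa"


print(choose_attn_implementation("bfloat16", flash_attn_installed=True))
print(choose_attn_implementation("float32", flash_attn_installed=True))
```

The returned string can then be passed as `attn_implementation=...` to `from_pretrained`.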

You can also set use_flash_attention_2=True to enable FlashAttention-2 but it is deprecated in favor of attn_implementation="flash_attention_2".

FlashAttention-2 can be combined with other optimization techniques like quantization to further speed up inference. For example, you can combine FlashAttention-2 with 8-bit or 4-bit quantization:

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "tiiuae/falcon-7b"

tokenizer = AutoTokenizer.from_pretrained(model_id)

# load in 8bit

model = AutoModelForCausalLM.from_pretrained(

model_id,

load_in_8bit=True,

attn_implementation="flash_attention_2",

)

# load in 4bit

model = AutoModelForCausalLM.from_pretrained(

model_id,

load_in_4bit=True,

attn_implementation="flash_attention_2",

)

Expected speedups

You can benefit from considerable speedups for inference, especially for inputs with long sequences. However, since FlashAttention-2 does not support computing attention scores with padding tokens, you must manually pad/unpad the attention scores for batched inference when the sequence contains padding tokens. This leads to a significant slowdown for batched generations with padding tokens.

To overcome this, you should use FlashAttention-2 without padding tokens in the sequence during training (by packing a dataset or concatenating sequences until reaching the maximum sequence length).
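Packing can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `pack_sequences` is a hypothetical helper, the token ids are made up, and using an EOS token as the separator between examples is an assumption rather than something the guide prescribes.

```python
def pack_sequences(tokenized, max_length, eos_token_id):
    """Concatenate tokenized examples (each followed by EOS) and cut fixed-size chunks."""
    stream = []
    for ids in tokenized:
        stream.extend(ids)
        stream.append(eos_token_id)
    # Keep only full chunks so that no padding token is ever needed.
    n_full = len(stream) // max_length
    return [stream[i * max_length:(i + 1) * max_length] for i in range(n_full)]


# Made-up token ids for four short examples
examples = [[5, 6, 7], [8, 9], [10, 11, 12, 13], [14]]
packed = pack_sequences(examples, max_length=4, eos_token_id=0)
print(packed)
```

Every resulting chunk has exactly `max_length` tokens, so a batch built from them contains no padding. The trade-off is that a chunk may contain the end of one example and the start of the next, and the incomplete tail of the stream is dropped in this sketch.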

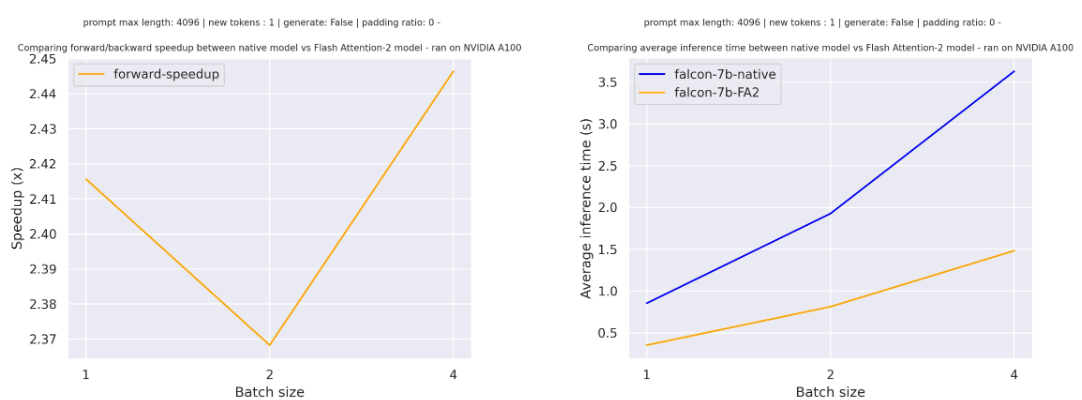

For a single forward pass on tiiuae/falcon-7b with a sequence length of 4096 and various batch sizes without padding tokens, the expected speedup is:

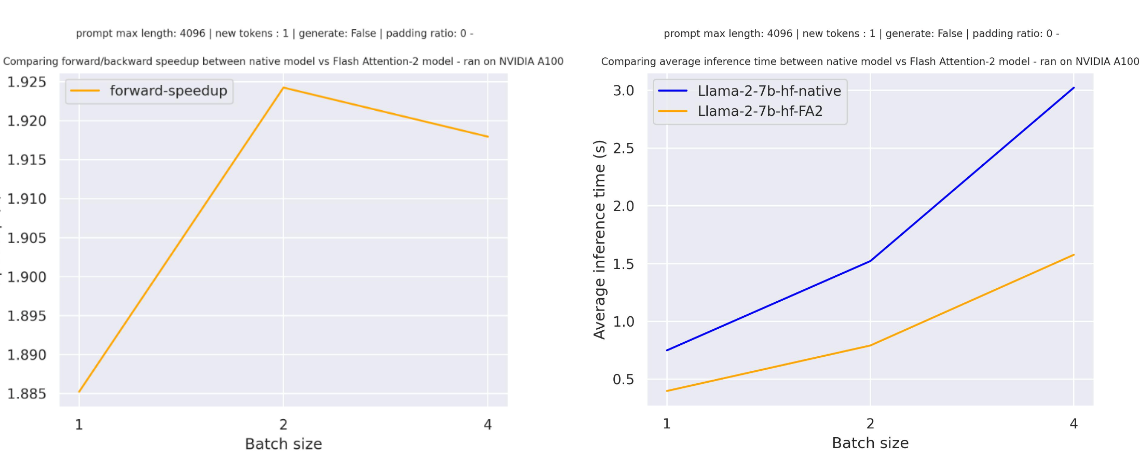

For a single forward pass on meta-llama/Llama-7b-hf with a sequence length of 4096 and various batch sizes without padding tokens, the expected speedup is:

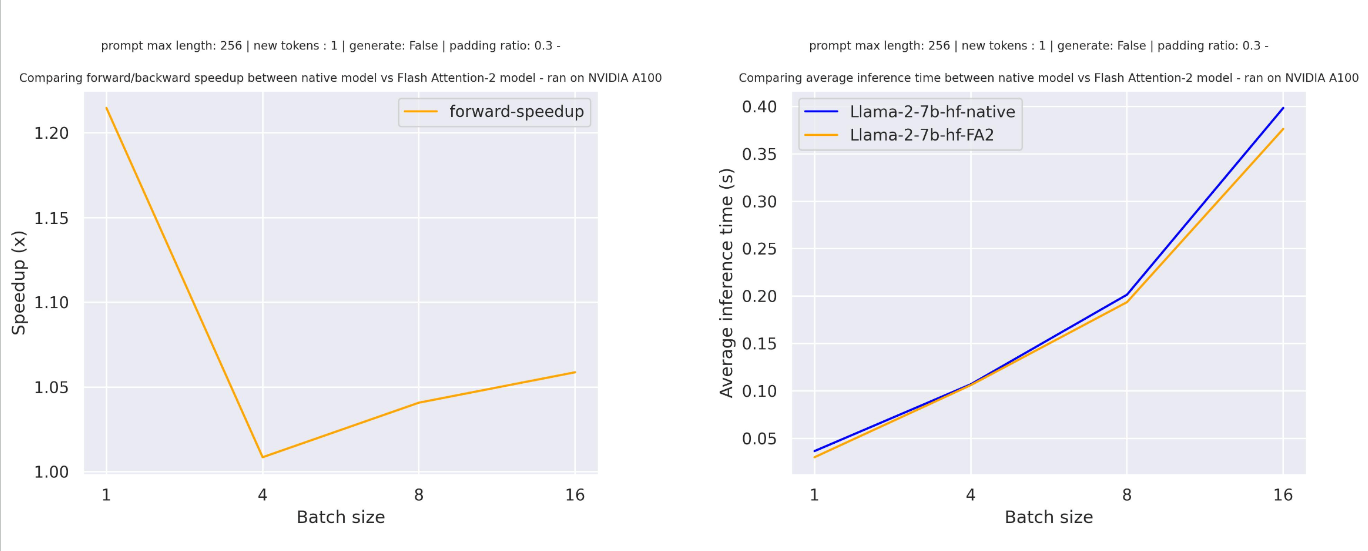

For sequences with padding tokens (generating with padding tokens), you need to unpad/pad the input sequences to correctly compute the attention scores. With a relatively small sequence length, a single forward pass creates overhead leading to a small speedup (in the example below, 30% of the input is filled with padding tokens):

But for larger sequence lengths, you can expect even more speedup benefits:

FlashAttention is more memory efficient, meaning you can train on much larger sequence lengths without running into out-of-memory issues. You can potentially reduce memory usage by up to 20x for larger sequence lengths. Take a look at the flash-attention repository for more details.

PyTorch scaled dot product attention

PyTorch's torch.nn.functional.scaled_dot_product_attention (SDPA) can also call FlashAttention and memory-efficient attention kernels under the hood. SDPA support is currently being added natively in Transformers and is used by default for torch>=2.1.1 when an implementation is available. You may also set attn_implementation="sdpa" in from_pretrained() to explicitly request SDPA to be used.

For now, Transformers supports SDPA inference and training for the following architectures:

- Albert

- Aria

- Audio Spectrogram Transformer

- Bamba

- Bart

- Beit

- Bert

- BioGpt

- CamemBERT

- Chameleon

- CLIP

- GLM

- Cohere

- Cohere2

- data2vec_audio

- data2vec_vision

- Dbrx

- DeiT

- DepthPro

- DiffLlama

- Dinov2

- Dinov2_with_registers

- DistilBert

- Dpr

- EncoderDecoder

- Emu3

- Falcon

- Gemma

- Gemma2

- GotOcr2

- Granite

- GPT2

- GPTBigCode

- GPTNeoX

- Hubert

- Idefics

- Idefics2

- Idefics3

- I-JEPA

- GraniteMoe

- JetMoe

- Jamba

- Llama

- Llava

- Llava-NeXT

- Llava-NeXT-Video

- LLaVA-Onevision

- M2M100

- Moonshine

- Mimi

- Mistral

- Mllama

- Mixtral

- ModernBert

- Moshi

- Musicgen

- MusicGen Melody

- NLLB

- OLMo

- OLMo2

- OLMoE

- OPT

- PaliGemma

- Phi

- Phi3

- PhiMoE

- mBart

- StableLm

- Starcoder2

- Qwen2

- Qwen2Audio

- Qwen2MoE

- Qwen2.5VL

- RoBERTa

- Sew

- SigLIP

- UniSpeech

- unispeech_sat

- Qwen2VL

- Nemotron

- SpeechEncoderDecoder

- VideoLlava

- VipLlava

- VisionEncoderDecoder

- ViT

- ViTHybrid

- ViTMAE

- ViTMSN

- VisionTextDualEncoder

- VideoMAE

- ViViT

- wav2vec2

- Whisper

- XLM-RoBERTa

- XLM-RoBERTa-XL

- YOLOS

- helium

- Zamba2

FlashAttention can only be used for models with the fp16 or bf16 torch type, so make sure to cast your model to the appropriate type first. The memory-efficient attention backend is able to handle fp32 models.

SDPA does not support certain sets of attention parameters, such as head_mask and output_attentions=True.

In that case, you should see a warning message and we will fall back to the (slower) eager implementation.

By default, SDPA selects the most performant kernel available but you can check whether a backend is available in a given setting (hardware, problem size) with torch.nn.attention.sdpa_kernel as a context manager:

import torch

from torch.nn.attention import SDPBackend, sdpa_kernel

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m")

model = AutoModelForCausalLM.from_pretrained("facebook/opt-350m", torch_dtype=torch.float16).to("cuda")

input_text = "Hello my dog is cute and"

inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

# restrict SDPA to the FlashAttention backend for this block
with sdpa_kernel(SDPBackend.FLASH_ATTENTION):
    outputs = model.generate(**inputs)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

If you see a bug with the traceback below, try using the nightly version of PyTorch which may have broader coverage for FlashAttention:

RuntimeError: No available kernel. Aborting execution.

# install PyTorch nightly

pip3 install -U --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu118

BetterTransformer

Some BetterTransformer features are being upstreamed to Transformers with default support for native torch.nn.functional.scaled_dot_product_attention. BetterTransformer still has a wider coverage than the Transformers SDPA integration, but you can expect more and more architectures to natively support SDPA in Transformers.

Check out our benchmarks with BetterTransformer and scaled dot product attention in "Out of the box acceleration and memory savings of 🤗 decoder models with PyTorch 2.0", and learn more about the fastpath execution in the BetterTransformer blog post.

BetterTransformer accelerates inference with its fastpath (native PyTorch specialized implementation of Transformer functions) execution. The two optimizations in the fastpath execution are:

- fusion, which combines multiple sequential operations into a single "kernel" to reduce the number of computation steps

- skipping the inherent sparsity of padding tokens to avoid unnecessary computation with nested tensors

BetterTransformer also converts all attention operations to use the more memory-efficient scaled dot product attention (SDPA), and it calls optimized kernels like FlashAttention under the hood.
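To get a feel for why skipping padding matters, you can count how many positions in a padded batch hold no real token. The sketch below is a rough illustration only: `padding_fraction` is a hypothetical helper, the sequence lengths are made up, and counting positions is a simplification of the actual compute cost.

```python
def padding_fraction(lengths):
    """Share of positions in a batch padded to its longest sequence that are padding."""
    longest = max(lengths)
    padded_slots = longest * len(lengths)
    real_tokens = sum(lengths)
    return (padded_slots - real_tokens) / padded_slots


# Made-up sequence lengths for a batch of four inputs
batch_lengths = [12, 48, 7, 33]
wasted = padding_fraction(batch_lengths)
print(f"{wasted:.1%} of the positions in this batch are padding")
```

The more the sequence lengths in a batch differ, the larger this fraction becomes, and the more there is to gain from not computing on padding.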

Before you start, make sure you have 🤗 Optimum installed.

Then you can enable BetterTransformer with the [PreTrainedModel.to_bettertransformer] method:

model = model.to_bettertransformer()

You can return the original Transformers model with the [~PreTrainedModel.reverse_bettertransformer] method. You should use this before saving your model to use the canonical Transformers modeling:

model = model.reverse_bettertransformer()

model.save_pretrained("saved_model")

bitsandbytes

bitsandbytes is a quantization library that includes support for 4-bit and 8-bit quantization. Quantization reduces your model size compared to its native full precision version, making it easier to fit large models onto GPUs with limited memory.
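A back-of-the-envelope estimate shows how much the precision matters for weight storage. This sketch only counts the bits needed for the weights; it ignores activations, the extra constants a quantization scheme stores, and any layers kept in higher precision. The parameter count of 1.7 billion is an assumed round number, and `weight_memory_gb` is a hypothetical helper.

```python
BITS_PER_PARAM = {"fp32": 32, "fp16": 16, "int8": 8, "4bit": 4}


def weight_memory_gb(num_params, precision):
    """Approximate weight storage in GB (10**9 bytes), ignoring quantization metadata."""
    return num_params * BITS_PER_PARAM[precision] / 8 / 1e9


num_params = 1.7e9  # assumption: a model with roughly 1.7 billion parameters
estimates = {p: weight_memory_gb(num_params, p) for p in BITS_PER_PARAM}
for precision, gigabytes in estimates.items():
    print(f"{precision}: ~{gigabytes:.2f} GB")
```

Under these assumptions, going from fp32 to 4-bit cuts weight storage by a factor of eight.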

Make sure you have bitsandbytes and 🤗 Accelerate installed:

# these versions support 8-bit and 4-bit

pip install "bitsandbytes>=0.39.0" "accelerate>=0.20.0"

# install Transformers

pip install transformers

4-bit

To load a model in 4-bit for inference, use the load_in_4bit parameter. The device_map parameter is optional, but we recommend setting it to "auto" to allow 🤗 Accelerate to automatically and efficiently allocate the model given the available resources in the environment.

from transformers import AutoModelForCausalLM

model_name = "bigscience/bloom-1b7"

model_4bit = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", device_map="auto", load_in_4bit=True)

To load a model in 4-bit for inference with multiple GPUs, you can control how much GPU RAM you want to allocate to each GPU. For example, to distribute 2GB of memory to the first GPU and 5GB of memory to the second GPU:

max_memory_mapping = {0: "2GB", 1: "5GB"}

model_name = "bigscience/bloom-3b"

model_4bit = AutoModelForCausalLM.from_pretrained(

model_name, torch_dtype="auto", device_map="auto", load_in_4bit=True, max_memory=max_memory_mapping

)
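To build intuition for what a per-device memory budget implies, the sketch below places layers on devices in order until each budget is used up. It is a simplified illustration and not the algorithm 🤗 Accelerate actually runs: `parse_gb` and `assign_layers` are hypothetical helpers, the layer sizes are made up, and sending overflow to "cpu" is an assumption of this sketch.

```python
def parse_gb(text):
    """Turn a string such as "2GB" into a number of bytes (1 GB = 10**9 bytes here)."""
    return float(text[:-2]) * 1e9


def assign_layers(layer_sizes, max_memory):
    """Fill devices in order; layers that fit nowhere are placed on "cpu"."""
    budgets = {device: parse_gb(limit) for device, limit in max_memory.items()}
    devices = list(budgets)
    placement = {}
    current = 0
    for name, size in layer_sizes.items():
        # Move on to the next device once the current one cannot hold this layer.
        while current < len(devices) and budgets[devices[current]] < size:
            current += 1
        if current == len(devices):
            placement[name] = "cpu"
        else:
            placement[name] = devices[current]
            budgets[devices[current]] -= size
    return placement


max_memory_mapping = {0: "2GB", 1: "5GB"}
# Made-up model: eight layers of 0.9 GB each
layer_sizes = {f"layer_{i}": 0.9e9 for i in range(8)}
placement = assign_layers(layer_sizes, max_memory_mapping)
print(placement)
```

With these numbers, two layers fit on the first GPU, five on the second, and the last one does not fit on either, which shows why the budgets should be chosen with the model size in mind.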

8-bit

If you're interested in learning more about the concepts underlying 8-bit quantization, read the blog post "Gentle Introduction to 8-bit Matrix Multiplication for transformers at scale using Hugging Face Transformers, Accelerate and bitsandbytes".
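As a rough illustration of what 8-bit quantization does to a set of weights, the sketch below applies absmax quantization to a small vector. It is a simplified example and not the bitsandbytes implementation, which among other things treats outlier values separately. The helper names and the weight values are made up, and the vector is assumed to contain at least one nonzero value.

```python
def quantize_absmax(values):
    """Map floats to integers in [-127, 127] using one scale for the whole vector."""
    scale = 127 / max(abs(v) for v in values)
    return [round(v * scale) for v in values], scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q / scale for q in quantized]


weights = [0.12, -0.58, 0.33, 1.27, -0.91]  # made-up values
q_weights, scale = quantize_absmax(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q_weights, max_error)
```

Each weight is stored as a single byte plus one shared scale, at the cost of a rounding error of at most half a quantization step per value.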

To load a model in 8-bit for inference, use the load_in_8bit parameter. The device_map parameter is optional, but we recommend setting it to "auto" to allow 🤗 Accelerate to automatically and efficiently allocate the model given the available resources in the environment:

from transformers import AutoModelForCausalLM, BitsAndBytesConfig

model_name = "bigscience/bloom-1b7"

model_8bit = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", quantization_config=BitsAndBytesConfig(load_in_8bit=True))

If you're loading a model in 8-bit for text generation, you should use the [~transformers.GenerationMixin.generate] method instead of the [Pipeline] function which is not optimized for 8-bit models and will be slower. Some sampling strategies, like nucleus sampling, are also not supported by the [Pipeline] for 8-bit models. You should also place all inputs on the same device as the model:

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

model_name = "bigscience/bloom-1b7"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model_8bit = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype="auto", quantization_config=BitsAndBytesConfig(load_in_8bit=True))

prompt = "Hello, my llama is cute"

inputs = tokenizer(prompt, return_tensors="pt").to(model_8bit.device)

generated_ids = model_8bit.generate(**inputs)

outputs = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)

To load a model in 8-bit for inference with multiple GPUs, you can control how much GPU RAM you want to allocate to each GPU. For example, to distribute 2GB of memory to the first GPU and 5GB of memory to the second GPU:

max_memory_mapping = {0: "2GB", 1: "5GB"}

model_name = "bigscience/bloom-3b"

model_8bit = AutoModelForCausalLM.from_pretrained(

model_name, torch_dtype="auto", device_map="auto", load_in_8bit=True, max_memory=max_memory_mapping

)

Feel free to try running an 11 billion parameter T5 model or the 3 billion parameter BLOOM model for inference on Google Colab's free tier GPUs!

🤗 Optimum

Learn more details about using ORT with 🤗 Optimum in the Accelerated inference on NVIDIA GPUs and Accelerated inference on AMD GPUs guides. This section only provides a brief and simple example.

ONNX Runtime (ORT) is a model accelerator that supports accelerated inference on Nvidia GPUs and on AMD GPUs that use the ROCm stack. ORT uses optimization techniques like fusing common operations into a single node and constant folding to reduce the number of computations performed and speed up inference. ORT also places the most computationally intensive operations on the GPU and the rest on the CPU to intelligently distribute the workload between the two devices.
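Constant folding is easy to picture on a toy expression graph: any operation whose inputs are all known ahead of time is replaced by its result before the graph is ever run. The sketch below is a minimal illustration of the idea and not how ORT represents or optimizes graphs; the tuple-based graph format and `fold_constants` are assumptions made for this example.

```python
import operator

OPS = {"add": operator.add, "mul": operator.mul}


def fold_constants(node):
    """Replace operations whose inputs are all constants by their value.

    A node is a number (constant), a string (runtime input), or a tuple
    (op_name, left, right).
    """
    if not isinstance(node, tuple):
        return node
    op, left, right = node
    left, right = fold_constants(left), fold_constants(right)
    if isinstance(left, (int, float)) and isinstance(right, (int, float)):
        return OPS[op](left, right)
    return (op, left, right)


# (x * (2 * 4)) + (3 + 5): both constant sub-expressions can be precomputed
graph = ("add", ("mul", "x", ("mul", 2, 4)), ("add", 3, 5))
folded = fold_constants(graph)
print(folded)
```

The folded graph contains two operations instead of four, and the saved work is saved again on every inference call.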

ORT is supported by 🤗 Optimum which can be used in 🤗 Transformers. You'll need to use an [~optimum.onnxruntime.ORTModel] for the task you're solving, and specify the provider parameter which can be set to either CUDAExecutionProvider, ROCMExecutionProvider or TensorrtExecutionProvider. If you want to load a model that was not yet exported to ONNX, you can set export=True to convert your model on-the-fly to the ONNX format:

from optimum.onnxruntime import ORTModelForSequenceClassification

ort_model = ORTModelForSequenceClassification.from_pretrained(

"distilbert/distilbert-base-uncased-finetuned-sst-2-english",

export=True,

provider="CUDAExecutionProvider",

)

Now you're free to use the model for inference:

from optimum.pipelines import pipeline

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("distilbert/distilbert-base-uncased-finetuned-sst-2-english")

# use a separate name so the imported pipeline function is not overwritten
pipe = pipeline(task="text-classification", model=ort_model, tokenizer=tokenizer, device="cuda:0")

result = pipe("Both the music and visual were astounding, not to mention the actors performance.")
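If the same script has to run on machines with different hardware, the value for the provider parameter can be chosen at runtime. The sketch below is one possible approach and not an Optimum API: `choose_provider` is a hypothetical helper, the preference order is arbitrary, and the CPU provider is assumed as the fallback. In practice, the list of available providers could be obtained from `onnxruntime.get_available_providers()`, assuming ONNX Runtime is installed.

```python
# Arbitrary preference order chosen for this sketch
PREFERENCE = ["CUDAExecutionProvider", "ROCMExecutionProvider", "TensorrtExecutionProvider"]


def choose_provider(available, preference=PREFERENCE):
    """Return the first preferred provider that is available, else the CPU provider."""
    for name in preference:
        if name in available:
            return name
    return "CPUExecutionProvider"


# Example lists as they might be reported on two different machines
print(choose_provider(["CUDAExecutionProvider", "CPUExecutionProvider"]))
print(choose_provider(["CPUExecutionProvider"]))
```

The returned name can then be passed as `provider=...` when loading the ORT model.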

Combine optimizations

It is often possible to combine several of the optimization techniques described above to get the best inference performance possible for your model. For example, you can load a model in 4-bit, and then run generation with the FlashAttention backend of SDPA:

import torch

from torch.nn.attention import SDPBackend, sdpa_kernel

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

# load model in 4-bit

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_compute_dtype=torch.float16

)

tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m")

model = AutoModelForCausalLM.from_pretrained("facebook/opt-350m", torch_dtype="auto", quantization_config=quantization_config)

input_text = "Hello my dog is cute and"

inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

# enable FlashAttention

with sdpa_kernel(SDPBackend.FLASH_ATTENTION):
    outputs = model.generate(**inputs)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))