* toctree * not-doctested.txt * collapse sections * feedback * update * rewrite get started sections * fixes * fix * loading models * fix * customize models * share * fix link * contribute part 1 * contribute pt 2 * fix toctree * tokenization pt 1 * Add new model (#32615) * v1 - working version * fix * fix * fix * fix * rename to correct name * fix title * fixup * rename files * fix * add copied from on tests * rename to `FalconMamba` everywhere and fix bugs * fix quantization + accelerate * fix copies * add `torch.compile` support * fix tests * fix tests and add slow tests * copies on config * merge the latest changes * fix tests * add few lines about instruct * Apply suggestions from code review Co-authored-by: Arthur <48595927+ArthurZucker@users.noreply.github.com> * fix * fix tests --------- Co-authored-by: Arthur <48595927+ArthurZucker@users.noreply.github.com> * "to be not" -> "not to be" (#32636) * "to be not" -> "not to be" * Update sam.md * Update trainer.py * Update modeling_utils.py * Update test_modeling_utils.py * Update test_modeling_utils.py * fix hfoption tag * tokenization pt. 2 * image processor * fix toctree * backbones * feature extractor * fix file name * processor * update not-doctested * update * make style * fix toctree * revision * make fixup * fix toctree * fix * make style * fix hfoption tag * pipeline * pipeline gradio * pipeline web server * add pipeline * fix toctree * not-doctested * prompting * llm optims * fix toctree * fixes * cache * text generation * fix * chat pipeline * chat stuff * xla * torch.compile * cpu inference * toctree * gpu inference * agents and tools * gguf/tiktoken * finetune * toctree * trainer * trainer pt 2 * optims * optimizers * accelerate * parallelism * fsdp * update * distributed cpu * hardware training * gpu training * gpu training 2 * peft * distrib debug * deepspeed 1 * deepspeed 2 * chat toctree * quant pt 1 * quant pt 2 * fix toctree * fix * fix * quant pt 3 * quant pt 4 * serialization * torchscript * scripts * tpu * review * model addition timeline * modular * more reviews * reviews * fix toctree * reviews reviews * continue reviews * more reviews * modular transformers * more review * zamba2 * fix * all frameworks * pytorch * supported model frameworks * flashattention * rm check_table * not-doctested.txt * rm check_support_list.py * feedback * updates/feedback * review * feedback * fix * update * feedback * updates * update --------- Co-authored-by: Younes Belkada <49240599+younesbelkada@users.noreply.github.com> Co-authored-by: Arthur <48595927+ArthurZucker@users.noreply.github.com> Co-authored-by: Quentin Gallouédec <45557362+qgallouedec@users.noreply.github.com>

7.6 KiB

SAM

Overview

SAM (Segment Anything Model) was proposed in Segment Anything by Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick.

The model can be used to predict segmentation masks of any object of interest given an input image.

The abstract from the paper is the following:

We introduce the Segment Anything (SA) project: a new task, model, and dataset for image segmentation. Using our efficient model in a data collection loop, we built the largest segmentation dataset to date (by far), with over 1 billion masks on 11M licensed and privacy respecting images. The model is designed and trained to be promptable, so it can transfer zero-shot to new image distributions and tasks. We evaluate its capabilities on numerous tasks and find that its zero-shot performance is impressive -- often competitive with or even superior to prior fully supervised results. We are releasing the Segment Anything Model (SAM) and corresponding dataset (SA-1B) of 1B masks and 11M images at https://segment-anything.com to foster research into foundation models for computer vision.

Tips:

- The model predicts binary masks that states the presence or not of the object of interest given an image.

- The model predicts much better results if input 2D points and/or input bounding boxes are provided

- You can prompt multiple points for the same image, and predict a single mask.

- Fine-tuning the model is not supported yet

- According to the paper, textual input should be also supported. However, at this time of writing this seems not to be supported according to the official repository.

This model was contributed by ybelkada and ArthurZ. The original code can be found here.

Below is an example on how to run mask generation given an image and a 2D point:

import torch

from PIL import Image

import requests

from transformers import SamModel, SamProcessor

device = "cuda" if torch.cuda.is_available() else "cpu"

model = SamModel.from_pretrained("facebook/sam-vit-huge").to(device)

processor = SamProcessor.from_pretrained("facebook/sam-vit-huge")

img_url = "https://huggingface.co/ybelkada/segment-anything/resolve/main/assets/car.png"

raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

input_points = [[[450, 600]]] # 2D location of a window in the image

inputs = processor(raw_image, input_points=input_points, return_tensors="pt").to(device)

with torch.no_grad():

outputs = model(**inputs)

masks = processor.image_processor.post_process_masks(

outputs.pred_masks.cpu(), inputs["original_sizes"].cpu(), inputs["reshaped_input_sizes"].cpu()

)

scores = outputs.iou_scores

You can also process your own masks alongside the input images in the processor to be passed to the model.

import torch

from PIL import Image

import requests

from transformers import SamModel, SamProcessor

device = "cuda" if torch.cuda.is_available() else "cpu"

model = SamModel.from_pretrained("facebook/sam-vit-huge").to(device)

processor = SamProcessor.from_pretrained("facebook/sam-vit-huge")

img_url = "https://huggingface.co/ybelkada/segment-anything/resolve/main/assets/car.png"

raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

mask_url = "https://huggingface.co/ybelkada/segment-anything/resolve/main/assets/car.png"

segmentation_map = Image.open(requests.get(mask_url, stream=True).raw).convert("1")

input_points = [[[450, 600]]] # 2D location of a window in the image

inputs = processor(raw_image, input_points=input_points, segmentation_maps=segmentation_map, return_tensors="pt").to(device)

with torch.no_grad():

outputs = model(**inputs)

masks = processor.image_processor.post_process_masks(

outputs.pred_masks.cpu(), inputs["original_sizes"].cpu(), inputs["reshaped_input_sizes"].cpu()

)

scores = outputs.iou_scores

Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with SAM.

- Demo notebook for using the model.

- Demo notebook for using the automatic mask generation pipeline.

- Demo notebook for inference with MedSAM, a fine-tuned version of SAM on the medical domain. 🌎

- Demo notebook for fine-tuning the model on custom data. 🌎

SlimSAM

SlimSAM, a pruned version of SAM, was proposed in 0.1% Data Makes Segment Anything Slim by Zigeng Chen et al. SlimSAM reduces the size of the SAM models considerably while maintaining the same performance.

Checkpoints can be found on the hub, and they can be used as a drop-in replacement of SAM.

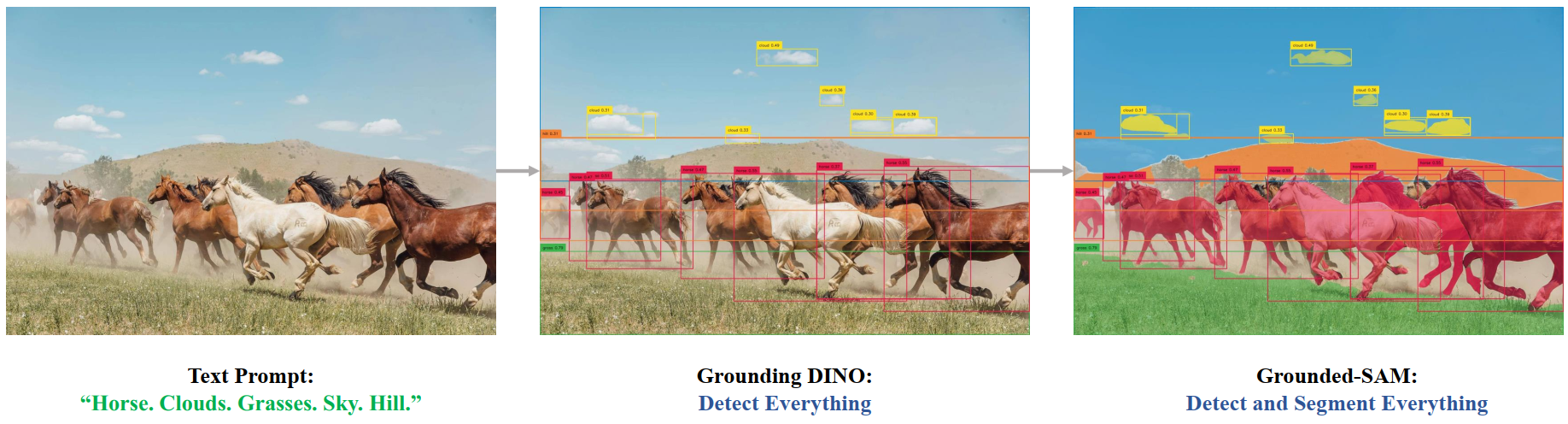

Grounded SAM

One can combine Grounding DINO with SAM for text-based mask generation as introduced in Grounded SAM: Assembling Open-World Models for Diverse Visual Tasks. You can refer to this demo notebook 🌍 for details.

Grounded SAM overview. Taken from the original repository.

SamConfig

autodoc SamConfig

SamVisionConfig

autodoc SamVisionConfig

SamMaskDecoderConfig

autodoc SamMaskDecoderConfig

SamPromptEncoderConfig

autodoc SamPromptEncoderConfig

SamProcessor

autodoc SamProcessor

SamImageProcessor

autodoc SamImageProcessor

SamModel

autodoc SamModel - forward

TFSamModel

autodoc TFSamModel - call