* Fixed typo: insted to instead * Fixed typo: relase to release * Fixed typo: nighlty to nightly * Fixed typos: versatible, benchamarks, becnhmark to versatile, benchmark, benchmarks * Fixed typo in comment: quantizd to quantized * Fixed typo: architecutre to architecture * Fixed typo: contibution to contribution * Fixed typo: Presequities to Prerequisites * Fixed typo: faste to faster * Fixed typo: extendeding to extending * Fixed typo: segmetantion_maps to segmentation_maps * Fixed typo: Alternativelly to Alternatively * Fixed incorrectly defined variable: output to output_disabled * Fixed typo in library name: tranformers.onnx to transformers.onnx * Fixed missing import: import tensorflow as tf * Fixed incorrectly defined variable: token_tensor to tokens_tensor * Fixed missing import: import torch * Fixed incorrectly defined variable and typo: uromaize to uromanize * Fixed incorrectly defined variable and typo: uromaize to uromanize * Fixed typo in function args: numpy.ndarry to numpy.ndarray * Fixed Inconsistent Library Name: Torchscript to TorchScript * Fixed Inconsistent Class Name: OneformerProcessor to OneFormerProcessor * Fixed Inconsistent Class Named Typo: TFLNetForMultipleChoice to TFXLNetForMultipleChoice * Fixed Inconsistent Library Name Typo: Pytorch to PyTorch * Fixed Inconsistent Function Name Typo: captureWarning to captureWarnings * Fixed Inconsistent Library Name Typo: Pytorch to PyTorch * Fixed Inconsistent Class Name Typo: TrainingArgument to TrainingArguments * Fixed Inconsistent Model Name Typo: Swin2R to Swin2SR * Fixed Inconsistent Model Name Typo: EART to BERT * Fixed Inconsistent Library Name Typo: TensorFLow to TensorFlow * Fixed Broken Link for Speech Emotion Classification with Wav2Vec2 * Fixed minor missing word Typo * Fixed minor missing word Typo * Fixed minor missing word Typo * Fixed minor missing word Typo * Fixed minor missing word Typo * Fixed minor missing word Typo * Fixed minor missing word Typo * Fixed minor missing word Typo * Fixed Punctuation: Two commas * Fixed Punctuation: No Space between XLM-R and is * Fixed Punctuation: No Space between [~accelerate.Accelerator.backward] and method * Added backticks to display model.fit() in codeblock * Added backticks to display openai-community/gpt2 in codeblock * Fixed Minor Typo: will to with * Fixed Minor Typo: is to are * Fixed Minor Typo: in to on * Fixed Minor Typo: inhibits to exhibits * Fixed Minor Typo: they need to it needs * Fixed Minor Typo: cast the load the checkpoints To load the checkpoints * Fixed Inconsistent Class Name Typo: TFCamembertForCasualLM to TFCamembertForCausalLM * Fixed typo in attribute name: outputs.last_hidden_states to outputs.last_hidden_state * Added missing verbosity level: fatal * Fixed Minor Typo: take To takes * Fixed Minor Typo: heuristic To heuristics * Fixed Minor Typo: setting To settings * Fixed Minor Typo: Content To Contents * Fixed Minor Typo: millions To million * Fixed Minor Typo: difference To differences * Fixed Minor Typo: while extract To which extracts * Fixed Minor Typo: Hereby To Here * Fixed Minor Typo: addition To additional * Fixed Minor Typo: supports To supported * Fixed Minor Typo: so that benchmark results TO as a consequence, benchmark * Fixed Minor Typo: a To an * Fixed Minor Typo: a To an * Fixed Minor Typo: Chain-of-though To Chain-of-thought

6.1 KiB

OneFormer

Overview

The OneFormer model was proposed in OneFormer: One Transformer to Rule Universal Image Segmentation by Jitesh Jain, Jiachen Li, MangTik Chiu, Ali Hassani, Nikita Orlov, Humphrey Shi. OneFormer is a universal image segmentation framework that can be trained on a single panoptic dataset to perform semantic, instance, and panoptic segmentation tasks. OneFormer uses a task token to condition the model on the task in focus, making the architecture task-guided for training, and task-dynamic for inference.

The abstract from the paper is the following:

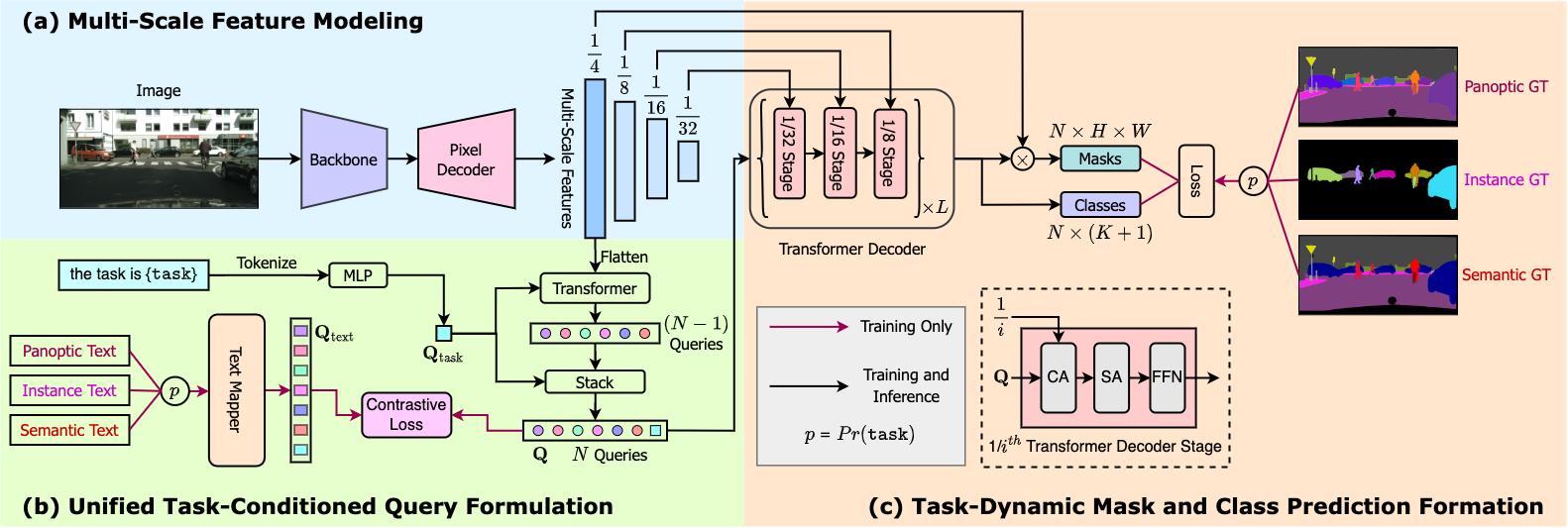

Universal Image Segmentation is not a new concept. Past attempts to unify image segmentation in the last decades include scene parsing, panoptic segmentation, and, more recently, new panoptic architectures. However, such panoptic architectures do not truly unify image segmentation because they need to be trained individually on the semantic, instance, or panoptic segmentation to achieve the best performance. Ideally, a truly universal framework should be trained only once and achieve SOTA performance across all three image segmentation tasks. To that end, we propose OneFormer, a universal image segmentation framework that unifies segmentation with a multi-task train-once design. We first propose a task-conditioned joint training strategy that enables training on ground truths of each domain (semantic, instance, and panoptic segmentation) within a single multi-task training process. Secondly, we introduce a task token to condition our model on the task at hand, making our model task-dynamic to support multi-task training and inference. Thirdly, we propose using a query-text contrastive loss during training to establish better inter-task and inter-class distinctions. Notably, our single OneFormer model outperforms specialized Mask2Former models across all three segmentation tasks on ADE20k, CityScapes, and COCO, despite the latter being trained on each of the three tasks individually with three times the resources. With new ConvNeXt and DiNAT backbones, we observe even more performance improvement. We believe OneFormer is a significant step towards making image segmentation more universal and accessible.

The figure below illustrates the architecture of OneFormer. Taken from the original paper.

This model was contributed by Jitesh Jain. The original code can be found here.

Usage tips

- OneFormer requires two inputs during inference: image and task token.

- During training, OneFormer only uses panoptic annotations.

- If you want to train the model in a distributed environment across multiple nodes, then one should update the

get_num_masksfunction inside in theOneFormerLossclass ofmodeling_oneformer.py. When training on multiple nodes, this should be set to the average number of target masks across all nodes, as can be seen in the original implementation here. - One can use [

OneFormerProcessor] to prepare input images and task inputs for the model and optional targets for the model. [OneFormerProcessor] wraps [OneFormerImageProcessor] and [CLIPTokenizer] into a single instance to both prepare the images and encode the task inputs. - To get the final segmentation, depending on the task, you can call [

~OneFormerProcessor.post_process_semantic_segmentation] or [~OneFormerImageProcessor.post_process_instance_segmentation] or [~OneFormerImageProcessor.post_process_panoptic_segmentation]. All three tasks can be solved using [OneFormerForUniversalSegmentation] output, panoptic segmentation accepts an optionallabel_ids_to_fuseargument to fuse instances of the target object/s (e.g. sky) together.

Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with OneFormer.

- Demo notebooks regarding inference + fine-tuning on custom data can be found here.

If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we will review it. The resource should ideally demonstrate something new instead of duplicating an existing resource.

OneFormer specific outputs

autodoc models.oneformer.modeling_oneformer.OneFormerModelOutput

autodoc models.oneformer.modeling_oneformer.OneFormerForUniversalSegmentationOutput

OneFormerConfig

autodoc OneFormerConfig

OneFormerImageProcessor

autodoc OneFormerImageProcessor - preprocess - encode_inputs - post_process_semantic_segmentation - post_process_instance_segmentation - post_process_panoptic_segmentation

OneFormerProcessor

autodoc OneFormerProcessor

OneFormerModel

autodoc OneFormerModel - forward

OneFormerForUniversalSegmentation

autodoc OneFormerForUniversalSegmentation - forward