
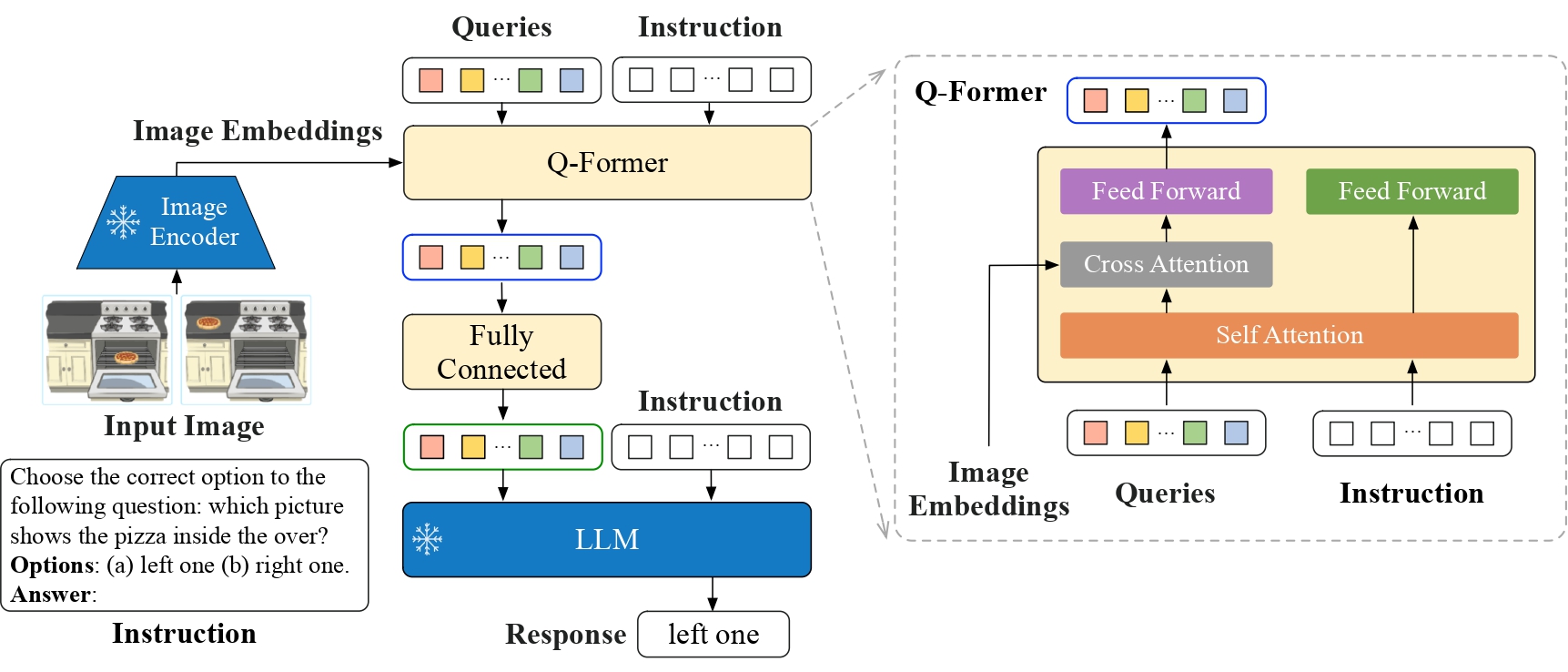

InstructBlipVideo

Overview

InstructBLIPVideo is an extension of the models proposed in InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning by Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale Fung, Steven Hoi. It uses the same architecture as InstructBLIP and works with the same checkpoints. The only difference is the ability to process videos.

The abstract from the paper is the following:

General-purpose language models that can solve various language-domain tasks have emerged driven by the pre-training and instruction-tuning pipeline. However, building general-purpose vision-language models is challenging due to the increased task discrepancy introduced by the additional visual input. Although vision-language pre-training has been widely studied, vision-language instruction tuning remains relatively less explored. In this paper, we conduct a systematic and comprehensive study on vision-language instruction tuning based on the pre-trained BLIP-2 models. We gather a wide variety of 26 publicly available datasets, transform them into instruction tuning format and categorize them into two clusters for held-in instruction tuning and held-out zero-shot evaluation. Additionally, we introduce instruction-aware visual feature extraction, a crucial method that enables the model to extract informative features tailored to the given instruction. The resulting InstructBLIP models achieve state-of-the-art zero-shot performance across all 13 held-out datasets, substantially outperforming BLIP-2 and the larger Flamingo. Our models also lead to state-of-the-art performance when finetuned on individual downstream tasks (e.g., 90.7% accuracy on ScienceQA IMG). Furthermore, we qualitatively demonstrate the advantages of InstructBLIP over concurrent multimodal models.

InstructBLIPVideo architecture. Taken from the original paper.

This model was contributed by RaushanTurganbay. The original code can be found here.

Usage tips

- The model was trained by sampling 4 frames per video, so it's recommended to sample 4 frames at inference as well.
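One way to pick 4 frames from a clip is to space them evenly from the first frame to the last. The sketch below is only an illustration: `sample_frame_indices` is a hypothetical helper, not part of the library, and the list of strings stands in for frames you have already decoded with a video reader of your choice.

```python
def sample_frame_indices(total_frames: int, num_frames: int = 4) -> list[int]:
    """Return `num_frames` evenly spaced indices between 0 and total_frames - 1."""
    if total_frames <= 0 or num_frames <= 0:
        raise ValueError("total_frames and num_frames must be positive")
    if num_frames == 1:
        return [0]
    # Distance between consecutive sampled frames (may be fractional).
    step = (total_frames - 1) / (num_frames - 1)
    return [round(i * step) for i in range(num_frames)]


# Stand-in for a decoded clip: 120 placeholder "frames".
video = [f"frame_{i}" for i in range(120)]
indices = sample_frame_indices(len(video))
clip = [video[i] for i in indices]  # the 4 frames to pass on to the processor
```

If a clip has fewer than 4 frames, this helper repeats indices instead of failing, which may or may not be what you want.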

Note

BLIP models after release v4.46 will raise warnings about adding `processor.num_query_tokens = {{num_query_tokens}}` and expanding the model embeddings layer to add the special `<image>` token. It is strongly recommended to add the attributes to the processor if you own the model checkpoint, or open a PR if it is not owned by you. Adding these attributes means that BLIP will add the number of query tokens required per image and expand the text with as many `<image>` placeholders as there will be query tokens. Usually it is around 500 tokens per image, so make sure that the text is not truncated, as otherwise there will be a failure when merging the embeddings. The attributes can be obtained from the model config, as `model.config.num_query_tokens`, and model embeddings expansion can be done by following this link.
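The placeholder expansion described in the note can be pictured with a small standalone sketch. This is not the processor's actual implementation: `expand_prompt` and `fits_in_context` are hypothetical helpers, the value 8 for `num_query_tokens` is arbitrary, and token counts are passed in by hand rather than computed by a tokenizer. It only shows why the text budget has to leave room for the placeholders.

```python
def expand_prompt(prompt: str, num_query_tokens: int, placeholder: str = "<image>") -> str:
    """Prepend one placeholder per query token to the prompt (illustrative only)."""
    return placeholder * num_query_tokens + prompt


def fits_in_context(num_text_tokens: int, num_query_tokens: int, max_length: int) -> bool:
    """Check that the text plus the placeholders stay within max_length tokens."""
    return num_text_tokens + num_query_tokens <= max_length


# Arbitrary illustrative value; in practice read it from model.config.num_query_tokens.
num_query_tokens = 8
expanded = expand_prompt("What is happening in the video?", num_query_tokens)

# With a 32-token limit, a 20-token prompt still fits but a 30-token prompt does not.
ok = fits_in_context(num_text_tokens=20, num_query_tokens=num_query_tokens, max_length=32)
too_long = fits_in_context(num_text_tokens=30, num_query_tokens=num_query_tokens, max_length=32)
```

If truncation removes some of the placeholders, their count no longer matches the number of query embeddings, which is the merge failure the note warns about.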

InstructBlipVideoConfig

autodoc InstructBlipVideoConfig - from_vision_qformer_text_configs

InstructBlipVideoVisionConfig

autodoc InstructBlipVideoVisionConfig

InstructBlipVideoQFormerConfig

autodoc InstructBlipVideoQFormerConfig

InstructBlipVideoProcessor

autodoc InstructBlipVideoProcessor

InstructBlipVideoVideoProcessor

autodoc InstructBlipVideoVideoProcessor - preprocess

InstructBlipVideoImageProcessor

autodoc InstructBlipVideoImageProcessor - preprocess

InstructBlipVideoVisionModel

autodoc InstructBlipVideoVisionModel - forward

InstructBlipVideoQFormerModel

autodoc InstructBlipVideoQFormerModel - forward

InstructBlipVideoModel

autodoc InstructBlipVideoModel - forward

InstructBlipVideoForConditionalGeneration

autodoc InstructBlipVideoForConditionalGeneration - forward - generate