InstructBLIP

Overview

The InstructBLIP model was proposed in InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning by Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale Fung, Steven Hoi. InstructBLIP leverages the BLIP-2 architecture for visual instruction tuning.

The abstract from the paper is the following:

General-purpose language models that can solve various language-domain tasks have emerged driven by the pre-training and instruction-tuning pipeline. However, building general-purpose vision-language models is challenging due to the increased task discrepancy introduced by the additional visual input. Although vision-language pre-training has been widely studied, vision-language instruction tuning remains relatively less explored. In this paper, we conduct a systematic and comprehensive study on vision-language instruction tuning based on the pre-trained BLIP-2 models. We gather a wide variety of 26 publicly available datasets, transform them into instruction tuning format and categorize them into two clusters for held-in instruction tuning and held-out zero-shot evaluation. Additionally, we introduce instruction-aware visual feature extraction, a crucial method that enables the model to extract informative features tailored to the given instruction. The resulting InstructBLIP models achieve state-of-the-art zero-shot performance across all 13 held-out datasets, substantially outperforming BLIP-2 and the larger Flamingo. Our models also lead to state-of-the-art performance when finetuned on individual downstream tasks (e.g., 90.7% accuracy on ScienceQA IMG). Furthermore, we qualitatively demonstrate the advantages of InstructBLIP over concurrent multimodal models.

Tips:

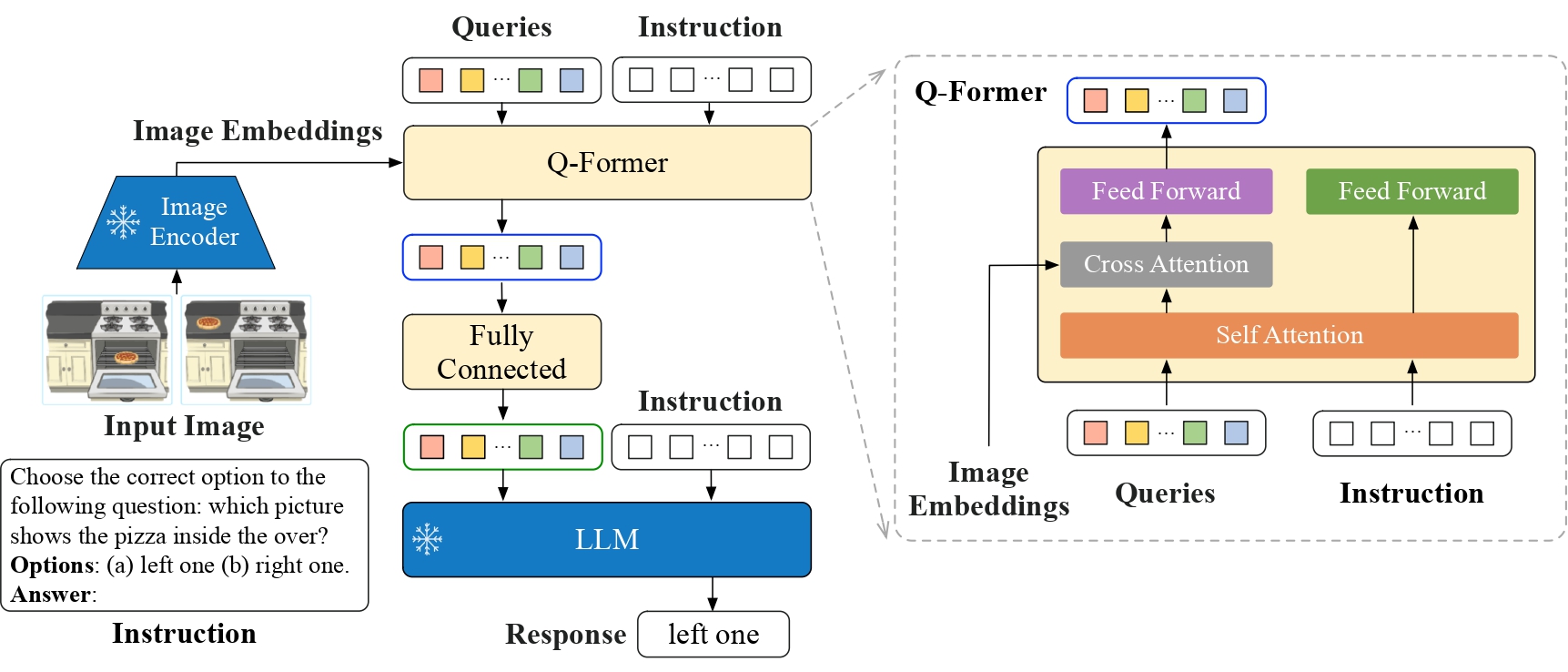

- InstructBLIP uses the same architecture as BLIP-2 with a tiny but important difference: it also feeds the text prompt (instruction) to the Q-Former.

InstructBLIP architecture. Taken from the original paper.
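The difference described in the tip can be pictured with a toy sketch. This is only an illustration, not the library's implementation: plain strings stand in for embeddings, and the helper names `qformer_inputs` and `features_for_llm` are hypothetical. It assumes that the instruction tokens are appended to the learned query tokens before the Q-Former runs, and that only the query positions are passed on to the language model.

```python
def qformer_inputs(query_tokens, instruction_tokens=None):
    """Return the sequence the Q-Former attends over (toy version)."""
    if instruction_tokens is None:
        # BLIP-2 style: the Q-Former sees only the learned queries.
        return list(query_tokens)
    # InstructBLIP style: the queries are followed by the instruction tokens.
    return list(query_tokens) + list(instruction_tokens)


def features_for_llm(qformer_sequence, num_queries):
    """Keep only the query positions; these are what the language model receives."""
    return qformer_sequence[:num_queries]


queries = [f"q{i}" for i in range(4)]          # stand-ins for learned query embeddings
instruction = ["What", "is", "unusual", "?"]   # stand-ins for instruction token embeddings

blip2_sequence = qformer_inputs(queries)
instructblip_sequence = qformer_inputs(queries, instruction)
llm_features = features_for_llm(instructblip_sequence, len(queries))
```

In the real model the queries and the instruction interact through attention, so the query outputs depend on the instruction even though the instruction positions themselves are dropped afterwards.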

This model was contributed by nielsr. The original code can be found here.

InstructBlipConfig

autodoc InstructBlipConfig - from_vision_qformer_text_configs
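As a rough sketch of how `from_vision_qformer_text_configs` might be used, the helper below composes a full configuration from three sub-configurations. The helper name `build_instructblip_config` is hypothetical, the choice of `OPTConfig` as the text configuration is an assumption made for illustration, and all sub-configurations use their default values. Whether this runs unchanged depends on the installed `transformers` version.

```python
def build_instructblip_config():
    """Compose an InstructBlipConfig from vision, Q-Former and text configs."""
    # Imported inside the function so that defining the helper does not
    # require transformers to be importable.
    from transformers import (
        InstructBlipConfig,
        InstructBlipQFormerConfig,
        InstructBlipVisionConfig,
        OPTConfig,
    )

    vision_config = InstructBlipVisionConfig()    # default vision encoder settings
    qformer_config = InstructBlipQFormerConfig()  # default Q-Former settings
    text_config = OPTConfig()                     # assumption: any supported text config

    return InstructBlipConfig.from_vision_qformer_text_configs(
        vision_config, qformer_config, text_config
    )
```

Calling `build_instructblip_config()` returns a configuration object only; no weights are downloaded or initialized.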

InstructBlipVisionConfig

autodoc InstructBlipVisionConfig

InstructBlipQFormerConfig

autodoc InstructBlipQFormerConfig

InstructBlipProcessor

autodoc InstructBlipProcessor

InstructBlipVisionModel

autodoc InstructBlipVisionModel - forward

InstructBlipQFormerModel

autodoc InstructBlipQFormerModel - forward

InstructBlipForConditionalGeneration

autodoc InstructBlipForConditionalGeneration - forward - generate
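A minimal inference sketch, assuming the checkpoint name `Salesforce/instructblip-vicuna-7b` is available on the Hub and that `torch` and `Pillow` are installed. The helper name `answer_about_image`, the default `max_new_tokens` value, and the image path passed by the caller are illustrative choices, not part of the library. Loading the checkpoint downloads several gigabytes of weights.

```python
CHECKPOINT = "Salesforce/instructblip-vicuna-7b"  # assumed checkpoint name


def answer_about_image(image_path, prompt, checkpoint=CHECKPOINT, max_new_tokens=64):
    """Generate a text answer to `prompt` about the image stored at `image_path`."""
    # Heavy imports are deferred so that defining the helper stays cheap.
    from PIL import Image
    from transformers import (
        InstructBlipForConditionalGeneration,
        InstructBlipProcessor,
    )

    processor = InstructBlipProcessor.from_pretrained(checkpoint)
    model = InstructBlipForConditionalGeneration.from_pretrained(checkpoint)
    model.eval()

    image = Image.open(image_path).convert("RGB")
    # The processor prepares the pixel values and tokenizes the prompt for both
    # the language model and the Q-Former.
    inputs = processor(images=image, text=prompt, return_tensors="pt")

    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.batch_decode(outputs, skip_special_tokens=True)[0].strip()
```

A call such as `answer_about_image("example.jpg", "What is unusual about this image?")` would return the generated answer as a string, where `example.jpg` is a placeholder for a local image file.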