* First draft

* More improvements

* More improvements

* More fixes

* Fix copies

* More improvements

* More fixes

* More improvements

* Convert checkpoint

* More improvements, set up tests

* Fix more tests

* Add UdopModel

* More improvements

* Fix equivalence test

* More fixes

* Redesign model

* Extend conversion script

* Use real inputs for conversion script

* Add image processor

* Improve conversion script

* Add UdopTokenizer

* Add fast tokenizer

* Add converter

* Update README's

* Add processor

* Add fully fledged tokenizer

* Add fast tokenizer

* Use processor in conversion script

* Add tokenizer tests

* Fix one more test

* Fix more tests

* Fix tokenizer tests

* Enable fast tokenizer tests

* Fix more tests

* Fix additional_special_tokens of fast tokenizer

* Fix tokenizer tests

* Fix more tests

* Fix equivalence test

* Rename image to pixel_values

* Rename seg_data to bbox

* More renamings

* Remove vis_special_token

* More improvements

* Add docs

* Fix copied from

* Update slow tokenizer

* Update fast tokenizer design

* Make text input optional

* Add first draft of processor tests

* Fix more processor tests

* Fix decoder_start_token_id

* Fix test_initialization

* Add integration test

* More improvements

* Improve processor, add test

* Add more copied from

* Add more copied from

* Add more copied from

* Add more copied from

* Remove print statement

* Update README and auto mapping

* Delete files

* Delete another file

* Remove code

* Fix test

* Fix docs

* Remove asserts

* Add doc tests

* Include UDOP in exotic model tests

* Add expected tesseract decodings

* Add sentencepiece

* Use same design as T5

* Add UdopEncoderModel

* Add UdopEncoderModel to tests

* More fixes

* Fix fast tokenizer

* Fix one more test

* Remove parallelisable attribute

* Fix copies

* Remove legacy file

* Copy from T5Tokenizer

* Fix rebase

* More fixes, copy from T5

* More fixes

* Fix init

* Use ArthurZ/udop for tests

* Make all model tests pass

* Remove UdopForConditionalGeneration from auto mapping

* Fix more tests

* fixups

* more fixups

* fix the tokenizers

* remove un-necessary changes

* nits

* nits

* replace truncate_sequences_boxes with truncate_sequences for fix-copies

* nit current path

* add a test for input ids

* ids that we should get taken from c9f7a32f57

* nits converting

* nits

* apply ruff

* nits

* nits

* style

* fix slow order of addition

* fix udop fast range as well

* fixup

* nits

* Add docstrings

* Fix gradient checkpointing

* Update code examples

* Skip tests

* Update integration test

* Address comment

* Make fixup

* Remove extra ids from tokenizer

* Skip test

* Apply suggestions from code review

Co-authored-by: Arthur <48595927+ArthurZucker@users.noreply.github.com>

* Update year

* Address comment

* Address more comments

* Address comments

* Add copied from

* Update CI

* Rename script

* Update model id

* Add AddedToken, skip tests

* Update CI

* Fix doc tests

* Do not use Tesseract for the doc tests

* Remove kwargs

* Add original inputs

* Update casting

* Fix doc test

* Update question

* Update question

* Use LayoutLMv3ImageProcessor

* Update organization

* Improve docs

* Update forward signature

* Make images optional

* Remove deprecated device argument

* Add comment, add add_prefix_space

* More improvements

* Remove kwargs

---------

Co-authored-by: ArthurZucker <arthur.zucker@gmail.com>

Co-authored-by: Arthur <48595927+ArthurZucker@users.noreply.github.com>

5.1 KiB

UDOP

Overview

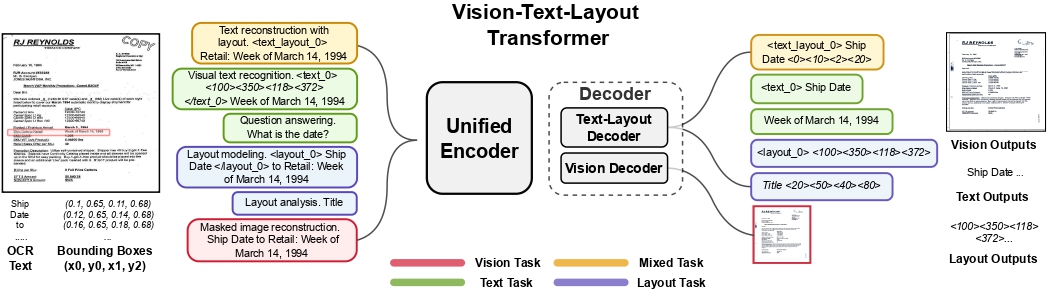

The UDOP model was proposed in Unifying Vision, Text, and Layout for Universal Document Processing by Zineng Tang, Ziyi Yang, Guoxin Wang, Yuwei Fang, Yang Liu, Chenguang Zhu, Michael Zeng, Cha Zhang, Mohit Bansal. UDOP adopts an encoder-decoder Transformer architecture based on T5 for document AI tasks like document image classification, document parsing and document visual question answering.

The abstract from the paper is the following:

We propose Universal Document Processing (UDOP), a foundation Document AI model which unifies text, image, and layout modalities together with varied task formats, including document understanding and generation. UDOP leverages the spatial correlation between textual content and document image to model image, text, and layout modalities with one uniform representation. With a novel Vision-Text-Layout Transformer, UDOP unifies pretraining and multi-domain downstream tasks into a prompt-based sequence generation scheme. UDOP is pretrained on both large-scale unlabeled document corpora using innovative self-supervised objectives and diverse labeled data. UDOP also learns to generate document images from text and layout modalities via masked image reconstruction. To the best of our knowledge, this is the first time in the field of document AI that one model simultaneously achieves high-quality neural document editing and content customization. Our method sets the state-of-the-art on 9 Document AI tasks, e.g., document understanding and QA, across diverse data domains like finance reports, academic papers, and websites. UDOP ranks first on the leaderboard of the Document Understanding Benchmark (DUE).*

UDOP architecture. Taken from the original paper.

Usage tips

- In addition to input_ids, [

UdopForConditionalGeneration] also expects the inputbbox, which are the bounding boxes (i.e. 2D-positions) of the input tokens. These can be obtained using an external OCR engine such as Google's Tesseract (there's a Python wrapper available). Each bounding box should be in (x0, y0, x1, y1) format, where (x0, y0) corresponds to the position of the upper left corner in the bounding box, and (x1, y1) represents the position of the lower right corner. Note that one first needs to normalize the bounding boxes to be on a 0-1000 scale. To normalize, you can use the following function:

def normalize_bbox(bbox, width, height):

return [

int(1000 * (bbox[0] / width)),

int(1000 * (bbox[1] / height)),

int(1000 * (bbox[2] / width)),

int(1000 * (bbox[3] / height)),

]

Here, width and height correspond to the width and height of the original document in which the token

occurs. Those can be obtained using the Python Image Library (PIL) library for example, as follows:

from PIL import Image

# Document can be a png, jpg, etc. PDFs must be converted to images.

image = Image.open(name_of_your_document).convert("RGB")

width, height = image.size

- At inference time, it's recommended to use the

generatemethod to autoregressively generate text given a document image. - One can use [

UdopProcessor] to prepare images and text for the model. By default, this class uses the Tesseract engine to extract a list of words and boxes (coordinates) from a given document. Its functionality is equivalent to that of [LayoutLMv3Processor], hence it supports passing eitherapply_ocr=Falsein case you prefer to use your own OCR engine orapply_ocr=Truein case you want the default OCR engine to be used.

This model was contributed by nielsr. The original code can be found here.

UdopConfig

autodoc UdopConfig

UdopTokenizer

autodoc UdopTokenizer - build_inputs_with_special_tokens - get_special_tokens_mask - create_token_type_ids_from_sequences - save_vocabulary

UdopTokenizerFast

autodoc UdopTokenizerFast

UdopProcessor

autodoc UdopProcessor - call

UdopModel

autodoc UdopModel - forward

UdopForConditionalGeneration

autodoc UdopForConditionalGeneration - forward

UdopEncoderModel

autodoc UdopEncoderModel - forward