
English | 简体中文 | 繁體中文 | 한국어 | Español | 日本語 | हिन्दी | Русский | Português | తెలుగు | Français | Deutsch | Tiếng Việt | العربية | اردو

State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow

🤗 Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio.

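These models can be applied on:

- 📝 Text, for tasks like text classification, information extraction, question answering, summarization, translation, and text generation, in over 100 languages.
- 🖼️ Images, for tasks like image classification, object detection, and segmentation.
- 🗣️ Audio, for tasks like speech recognition and audio classification.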

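Transformer models can also perform tasks that combine several modalities, such as table question answering, optical character recognition, information extraction from scanned documents, video classification, and visual question answering.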

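🤗 Transformers provides APIs to quickly download and use those pretrained models on a given text, fine-tune them on your own datasets, and then share them with the community on our model hub. At the same time, each Python module defining an architecture is fully standalone and can be modified to enable quick research experiments.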

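🤗 Transformers is backed by the three most popular deep learning libraries, JAX, PyTorch and TensorFlow, with a seamless integration between them. It's straightforward to train your models with one before loading them for inference with the other.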

Online demos

You can test most of our models directly on their pages on the model hub. We also offer private model hosting, versioning, and an inference API for public and private models.

Here are a few examples:

In Natural Language Processing:

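- Masked word completion with BERT
- Named entity recognition with Electra
- Text generation with GPT-2
- Natural language inference with RoBERTa
- Summarization with BART
- Question answering with DistilBERT
- Translation with T5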

In Computer Vision:

In Audio:

In Multimodal tasks:

Write With Transformer, built by the Hugging Face team, is the official demo of this repo's text generation capabilities.

If you are looking for custom support from the Hugging Face team

Quick tour

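To immediately use a model on a given input (text, image, audio, ...), we provide the `pipeline` API. Pipelines group together a pretrained model with the preprocessing that was used during that model's training. Here is how to quickly use a pipeline to classify positive versus negative texts: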

```python
>>> from transformers import pipeline

# Allocate a pipeline for sentiment-analysis
>>> classifier = pipeline('sentiment-analysis')
>>> classifier('We are very happy to introduce pipeline to the transformers repository.')
[{'label': 'POSITIVE', 'score': 0.9996980428695679}]
```

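The `pipeline('sentiment-analysis')` call downloads and caches the pretrained model used by the pipeline, and the following call evaluates it on the given text. Here, the answer is "positive" with a confidence of 99.97%.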
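The score in that output is a probability. As a rough sketch of how such a score can be produced, assuming a single-label classifier with two classes whose raw logits are turned into probabilities with a softmax, the example below derives a pipeline-style result and formats it as a percentage. This is not the library's implementation; the `LABELS` list, the logits and the helper names are made up for illustration, and only the final score is copied from the output above.

```python
import math

# Hypothetical label set for a binary sentiment classifier (illustration only).
LABELS = ["NEGATIVE", "POSITIVE"]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (numerically stable form)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def postprocess(logits):
    """Return a pipeline-style result: the most likely label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return [{"label": LABELS[best], "score": probs[best]}]


def as_percentage(result):
    """Format the first result as 'LABEL (xx.xx%)'."""
    return f"{result[0]['label']} ({result[0]['score'] * 100:.2f}%)"


# Made-up logits, not produced by a real model.
print(postprocess([-4.0, 4.1]))
# Score copied from the sentiment-analysis output shown above.
print(as_percentage([{"label": "POSITIVE", "score": 0.9996980428695679}]))  # POSITIVE (99.97%)
```

Rounding 0.99969... to two decimals of a percent is where the 99.97% figure in the text comes from.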

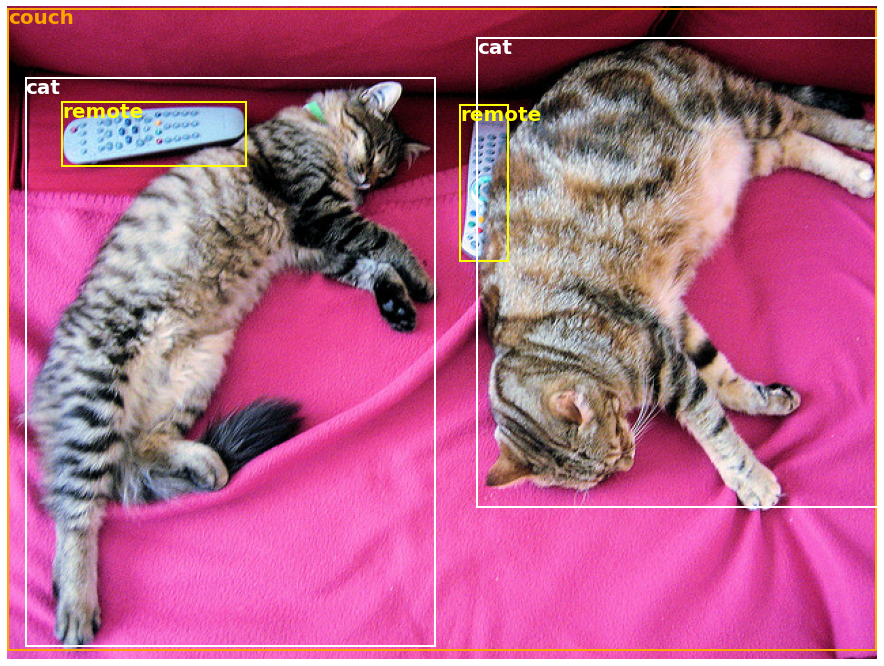

Many tasks have a pretrained pipeline ready to go, not only in NLP but also in computer vision and speech. For example, we can easily extract the objects detected in an image:

```python
>>> import requests
>>> from PIL import Image
>>> from transformers import pipeline

# Download an image with cute cats
>>> url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/coco_sample.png"
>>> image_data = requests.get(url, stream=True).raw
>>> image = Image.open(image_data)

# Allocate a pipeline for object detection
>>> object_detector = pipeline('object-detection')
>>> object_detector(image)
[{'score': 0.9982201457023621,
  'label': 'remote',
  'box': {'xmin': 40, 'ymin': 70, 'xmax': 175, 'ymax': 117}},
 {'score': 0.9960021376609802,
  'label': 'remote',
  'box': {'xmin': 333, 'ymin': 72, 'xmax': 368, 'ymax': 187}},
 {'score': 0.9954745173454285,
  'label': 'couch',
  'box': {'xmin': 0, 'ymin': 1, 'xmax': 639, 'ymax': 473}},
 {'score': 0.9988006353378296,
  'label': 'cat',
  'box': {'xmin': 13, 'ymin': 52, 'xmax': 314, 'ymax': 470}},
 {'score': 0.9986783862113953,
  'label': 'cat',
  'box': {'xmin': 345, 'ymin': 23, 'xmax': 640, 'ymax': 368}}]
```

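Here, we get a list of objects detected in the image, with a box surrounding each object and a confidence score. The original image is on the left, with the predictions displayed on the right: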

You can learn more about the tasks supported by the `pipeline` API in this tutorial.
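Because the object-detection pipeline returns plain Python dictionaries, ordinary list processing is enough to post-process its results. The sketch below copies the output shown above, counts detections per label, keeps only those above a confidence threshold (highest score first), and computes each box's area, assuming the box coordinates are in pixels. The 0.998 threshold and the helper names are arbitrary choices for illustration.

```python
from collections import Counter

# Output copied from the object-detection example above.
detections = [
    {"score": 0.9982201457023621, "label": "remote",
     "box": {"xmin": 40, "ymin": 70, "xmax": 175, "ymax": 117}},
    {"score": 0.9960021376609802, "label": "remote",
     "box": {"xmin": 333, "ymin": 72, "xmax": 368, "ymax": 187}},
    {"score": 0.9954745173454285, "label": "couch",
     "box": {"xmin": 0, "ymin": 1, "xmax": 639, "ymax": 473}},
    {"score": 0.9988006353378296, "label": "cat",
     "box": {"xmin": 13, "ymin": 52, "xmax": 314, "ymax": 470}},
    {"score": 0.9986783862113953, "label": "cat",
     "box": {"xmin": 345, "ymin": 23, "xmax": 640, "ymax": 368}},
]


def box_area(box):
    """Area of an xmin/ymin/xmax/ymax box (square pixels, assuming pixel coordinates)."""
    return (box["xmax"] - box["xmin"]) * (box["ymax"] - box["ymin"])


def confident(dets, threshold):
    """Keep detections whose score is at least `threshold`, highest score first."""
    kept = [d for d in dets if d["score"] >= threshold]
    return sorted(kept, key=lambda d: d["score"], reverse=True)


print(Counter(d["label"] for d in detections))
for d in confident(detections, threshold=0.998):
    print(d["label"], round(d["score"], 4), box_area(d["box"]))
```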

In addition to `pipeline`, all it takes to download and use any of the pretrained models on your given task is three lines of code. Here is the PyTorch version:

```python
>>> from transformers import AutoTokenizer, AutoModel

>>> tokenizer = AutoTokenizer.from_pretrained("google-bert/bert-base-uncased")
>>> model = AutoModel.from_pretrained("google-bert/bert-base-uncased")

>>> inputs = tokenizer("Hello world!", return_tensors="pt")
>>> outputs = model(**inputs)
```

And here is the equivalent code for TensorFlow:

```python
>>> from transformers import AutoTokenizer, TFAutoModel

>>> tokenizer = AutoTokenizer.from_pretrained("google-bert/bert-base-uncased")
>>> model = TFAutoModel.from_pretrained("google-bert/bert-base-uncased")

>>> inputs = tokenizer("Hello world!", return_tensors="tf")
>>> outputs = model(**inputs)
```

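The tokenizer is responsible for all the preprocessing the pretrained model expects, and can be called directly on a single string (as in the examples above) or on a list. It outputs a dictionary that you can use in downstream code, or simply pass directly to your model using the `**` argument unpacking operator.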
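To make the `**` step concrete without downloading anything, the sketch below uses a hand-written dictionary shaped like a BERT tokenizer's output (keys `input_ids`, `token_type_ids` and `attention_mask`; the ID values are only illustrative) and a stand-in function `fake_model`, which is hypothetical and merely reports what it received. Unpacking a dictionary with `**` passes each key as the keyword argument of the same name, which is what `model(**inputs)` relies on in the examples above.

```python
# Hand-written stand-in for a tokenizer's output; the ID values are illustrative only.
inputs = {
    "input_ids": [[101, 7592, 2088, 999, 102]],
    "token_type_ids": [[0, 0, 0, 0, 0]],
    "attention_mask": [[1, 1, 1, 1, 1]],
}


def fake_model(input_ids, attention_mask=None, token_type_ids=None):
    """Hypothetical model stand-in: summarizes the arguments it was called with."""
    return {
        "num_sequences": len(input_ids),
        "seq_len": len(input_ids[0]),
        "masked_positions": sum(m == 0 for row in attention_mask for m in row),
        "received_token_type_ids": token_type_ids is not None,
    }


# `**inputs` expands to input_ids=..., token_type_ids=..., attention_mask=...
outputs = fake_model(**inputs)
same = fake_model(
    input_ids=inputs["input_ids"],
    token_type_ids=inputs["token_type_ids"],
    attention_mask=inputs["attention_mask"],
)
print(outputs == same)  # True
```

Because the matching is by name, the order of keys in the dictionary does not matter, only that each key is a parameter the callee accepts.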

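The model itself is a regular PyTorch `nn.Module` or a TensorFlow `tf.keras.Model` (depending on your backend), which you can use as usual. This tutorial explains how to integrate such a model into a classic PyTorch or TensorFlow training loop, or how to use our `Trainer` API to quickly fine-tune on a new dataset.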

Why should I use transformers?

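- Easy-to-use state-of-the-art models:
  - High performance on natural language understanding and generation, computer vision, and audio tasks.
  - Low barrier to entry for educators and practitioners.
  - Few user-facing abstractions, with just three classes to learn.
  - A unified API for using all our pretrained models.

- Lower compute costs, smaller carbon footprint:
  - Researchers can share trained models instead of always retraining.
  - Practitioners can reduce compute time and production costs.
  - Numerous architectures with over 60,000 pretrained models across all modalities.

- Choose the right framework for every part of a model's lifetime:
  - Train state-of-the-art models in 3 lines of code.
  - Move a single model between TF2.0/PyTorch/JAX frameworks at will.
  - Seamlessly pick the right framework for training, evaluation, and production.

- Easily customize a model or an example to your needs:
  - We provide examples for each architecture to reproduce the results published by its original authors.
  - Model internals are exposed as consistently as possible.
  - Model files can be used independently of the library for quick experiments.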

Why shouldn't I use transformers?

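- This library is not a modular toolbox of building blocks for neural nets. The code in the model files is intentionally not refactored with additional abstractions, so that researchers can quickly iterate on each of the models without diving into additional abstractions/files.
- The training API is not intended to work on any model; it is optimized to work with the models provided by the library. For generic machine learning loops, you should use another library (possibly Accelerate).
- While we strive to present as many use cases as possible, the scripts in our examples folder are just that: examples. It is expected that they won't work out of the box on your specific problem and that you will need to change a few lines of code to adapt them to your needs.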

Installation

With pip

This repository is tested on Python 3.9+, Flax 0.4.1+, PyTorch 2.1+, and TensorFlow 2.6+.

You should install 🤗 Transformers in a virtual environment. If you're unfamiliar with Python virtual environments, check out the user guide.

First, create a virtual environment with the version of Python you're going to use and activate it.

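Then, you will need to install at least one of Flax, PyTorch, or TensorFlow. Please refer to the TensorFlow installation page, the PyTorch installation page, and/or the Flax and JAX installation pages for the specific installation command for your platform.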
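Before installing 🤗 Transformers itself, you may want to check that your environment meets these requirements. The sketch below uses only the Python standard library: it compares the running interpreter with the Python 3.9+ minimum listed above and calls `importlib.util.find_spec` to see which of the import names `torch`, `tensorflow` and `flax` can be located, without importing them. The helper names are hypothetical, and locating a package says nothing about whether its version meets the minimums listed above.

```python
import importlib.util
import sys

# Minimum Python version from the tested-versions note above.
MIN_PYTHON = (3, 9)
# Top-level import names of the supported backends.
BACKENDS = ("torch", "tensorflow", "flax")


def python_ok(version_info=sys.version_info):
    """True if the (major, minor) version meets the minimum tested Python version."""
    return tuple(version_info[:2]) >= MIN_PYTHON


def installed_backends():
    """Return the backends whose top-level package can be located (not imported)."""
    return [name for name in BACKENDS if importlib.util.find_spec(name) is not None]


if __name__ == "__main__":
    print("Python OK:", python_ok())
    found = installed_backends()
    print("Backends found:", found or "none; install one before transformers")
```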

When one of those backends has been installed, 🤗 Transformers can be installed using pip as follows:

```bash
pip install transformers
```

If you'd like to play with the examples, or need the bleeding edge of the code and can't wait for a new release, you must install the library from source.

With conda

🤗 Transformers can be installed using conda as follows:

```bash
conda install conda-forge::transformers
```

Note: Installing transformers from the huggingface channel is deprecated.

Follow the installation pages of Flax, PyTorch, or TensorFlow to see how to install them with conda.

Note: On Windows, you may be prompted to activate Developer Mode in order to benefit from caching. If this is not an option for you, please let us know in this issue.

Model architectures

All the model checkpoints provided by 🤗 Transformers are seamlessly integrated from the huggingface.co model hub, where they are uploaded directly by users and organizations.

🤗 Transformers currently provides the following architectures: see here for a high-level summary of each of them.

To check whether each model has an implementation in Flax, PyTorch, or TensorFlow, or has an associated tokenizer backed by the 🤗 Tokenizers library, refer to this table.

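These implementations have been tested on several datasets (see the example scripts) and should match the performance of the original implementations. You can find more details on performance in the Examples section of the documentation.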

Learn more

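| Section | Description |
|---|---|
| Documentation | Full API documentation and tutorials |
| Task summary | Tasks supported by 🤗 Transformers |
| Preprocessing tutorial | Using the Tokenizer class to prepare data for the models |
| Training and fine-tuning | Using the models provided by 🤗 Transformers in a PyTorch/TensorFlow training loop and with the Trainer API |
| Quick tour: Fine-tuning/usage scripts | Example scripts for fine-tuning models on a wide range of tasks |
| Model sharing and uploading | Upload and share your fine-tuned models with the community |
| Migration | Migrate to 🤗 Transformers from pytorch-transformers or pytorch-pretrained-bert |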

Citation

We now have a paper you can cite for the 🤗 Transformers library:

```bibtex
@inproceedings{wolf-etal-2020-transformers,
    title = "Transformers: State-of-the-Art Natural Language Processing",
    author = "Thomas Wolf and Lysandre Debut and Victor Sanh and Julien Chaumond and Clement Delangue and Anthony Moi and Pierric Cistac and Tim Rault and Rémi Louf and Morgan Funtowicz and Joe Davison and Sam Shleifer and Patrick von Platen and Clara Ma and Yacine Jernite and Julien Plu and Canwen Xu and Teven Le Scao and Sylvain Gugger and Mariama Drame and Quentin Lhoest and Alexander M. Rush",
    booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations",
    month = oct,
    year = "2020",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://www.aclweb.org/anthology/2020.emnlp-demos.6",
    pages = "38--45"
}
```