BLIP-2

Overview

The BLIP-2 model was proposed in BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models by Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. BLIP-2 leverages frozen pre-trained image encoders and large language models (LLMs) by training a lightweight, 12-layer Transformer encoder in between them, achieving state-of-the-art performance on various vision-language tasks. Most notably, BLIP-2 improves upon Flamingo, an 80-billion-parameter model, by 8.7% on zero-shot VQAv2 with 54x fewer trainable parameters.

The abstract from the paper is the following:

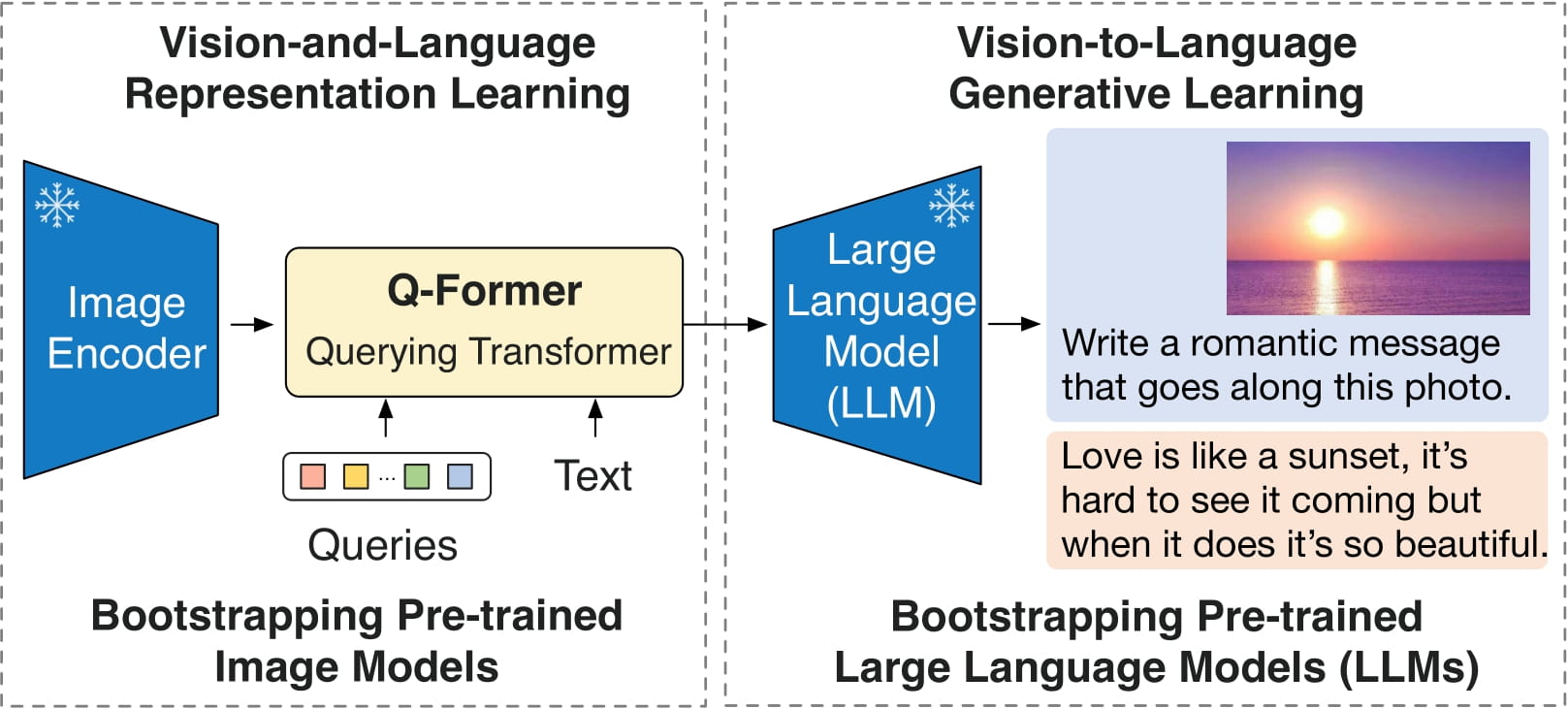

The cost of vision-and-language pre-training has become increasingly prohibitive due to end-to-end training of large-scale models. This paper proposes BLIP-2, a generic and efficient pre-training strategy that bootstraps vision-language pre-training from off-the-shelf frozen pre-trained image encoders and frozen large language models. BLIP-2 bridges the modality gap with a lightweight Querying Transformer, which is pre-trained in two stages. The first stage bootstraps vision-language representation learning from a frozen image encoder. The second stage bootstraps vision-to-language generative learning from a frozen language model. BLIP-2 achieves state-of-the-art performance on various vision-language tasks, despite having significantly fewer trainable parameters than existing methods. For example, our model outperforms Flamingo80B by 8.7% on zero-shot VQAv2 with 54x fewer trainable parameters. We also demonstrate the model's emerging capabilities of zero-shot image-to-text generation that can follow natural language instructions.

BLIP-2 architecture. Taken from the original paper.
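
To make the bridging idea more concrete, here is a toy, pure-Python sketch of a fixed set of query vectors cross-attending to image features and then being projected to a language-model width. It is not the Q-Former itself (the real module is a multi-layer Transformer with its own trained weights); every name, size, and random weight below is an assumption chosen for illustration. The point it tries to show is that the number of vectors handed to the language model is set by the number of queries, not by the number of image patches.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    """Small random matrix standing in for trained weights or features."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def query_cross_attention(queries, image_feats, w_q, w_k, w_v):
    """Single-head cross-attention: queries attend over image features."""
    q = matmul(queries, w_q)      # (num_queries, d)
    k = matmul(image_feats, w_k)  # (num_patches, d)
    v = matmul(image_feats, w_v)  # (num_patches, d)
    scale = math.sqrt(len(q[0]))
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    weights = [softmax(r) for r in scores]  # each row sums to 1 over patches
    return matmul(weights, v)               # (num_queries, d)


rng = random.Random(0)
# Toy sizes (assumptions), far smaller than any real checkpoint.
num_queries, d_query, d_image, d_llm = 4, 8, 12, 16

queries = random_matrix(num_queries, d_query, rng)  # learned in the real model
w_q = random_matrix(d_query, d_query, rng)
w_k = random_matrix(d_image, d_query, rng)
w_v = random_matrix(d_image, d_query, rng)
w_proj = random_matrix(d_query, d_llm, rng)         # projection to the LLM width


def image_to_llm_tokens(image_feats):
    """Map any number of image patch features to num_queries LLM-width vectors."""
    attended = query_cross_attention(queries, image_feats, w_q, w_k, w_v)
    return matmul(attended, w_proj)


small = image_to_llm_tokens(random_matrix(10, d_image, rng))  # 10 patches
large = image_to_llm_tokens(random_matrix(50, d_image, rng))  # 50 patches
print(len(small), len(small[0]))  # 4 16
print(len(large), len(large[0]))  # 4 16
```

Both calls return four vectors of width 16 even though the inputs have different numbers of patches, which is why a fixed count of query tokens per image can be reserved in the text input.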

This model was contributed by nielsr. The original code can be found here.

Usage tips

- BLIP-2 can be used for conditional text generation given an image and an optional text prompt. At inference time, it's recommended to use the `generate` method.
- One can use `Blip2Processor` to prepare images for the model, and to decode the predicted token IDs back to text.
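
The two tips above can be sketched as follows. This is a minimal sketch rather than an official recipe: the helper names `build_vqa_prompt` and `describe_image` are hypothetical, the checkpoint name `Salesforce/blip2-opt-2.7b` and the "Question: ... Answer:" prompt template are assumptions not stated on this page, and the heavy imports are deferred into the function so that the prompt helper can be used without loading any model.

```python
def build_vqa_prompt(question):
    """Format a question in a 'Question: ... Answer:' style (assumed template)."""
    return f"Question: {question.strip()} Answer:"


def describe_image(image, question=None,
                   checkpoint="Salesforce/blip2-opt-2.7b",  # assumed checkpoint
                   max_new_tokens=20):
    """Caption a PIL image, or answer a question about it when one is given."""
    # Deferred imports: torch and transformers are only needed when this runs.
    import torch
    from transformers import Blip2ForConditionalGeneration, Blip2Processor

    processor = Blip2Processor.from_pretrained(checkpoint)
    model = Blip2ForConditionalGeneration.from_pretrained(checkpoint)
    model.eval()

    if question is None:
        # Image only: the model generates a caption.
        inputs = processor(images=image, return_tensors="pt")
    else:
        # Image plus optional text prompt: generation continues the prompt.
        inputs = processor(images=image, text=build_vqa_prompt(question),
                           return_tensors="pt")

    with torch.no_grad():
        generated_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # The processor also decodes the predicted token IDs back to text.
    return processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()
```

With Pillow installed, `describe_image(Image.open(path))` would request a caption and `describe_image(Image.open(path), "how many cats are there?")` would request an answer; loading the checkpoint downloads several gigabytes, so a real application would load the processor and model once and reuse them.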

Note

BLIP models after release v4.46 will raise warnings about adding `processor.num_query_tokens = {{num_query_tokens}}` and expanding the model embeddings layer to add the special `<image>` token. It is strongly recommended to add the attributes to the processor if you own the model checkpoint, or open a PR if it is not owned by you. Adding these attributes means that BLIP will add the number of query tokens required per image and expand the text with as many `<image>` placeholders as there will be query tokens. Usually it is around 500 tokens per image, so make sure that the text is not truncated, as otherwise there will be a failure when merging the embeddings. The attributes can be obtained from the model config as `model.config.num_query_tokens`, and the model embeddings expansion can be done by following this link.
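
As a rough illustration of the note above, the sketch below copies `num_query_tokens` from a model config onto a processor and shows what "one `<image>` placeholder per query token" looks like as a string. The function names are hypothetical, the `SimpleNamespace` objects are stand-ins so the example runs without a checkpoint, the value 32 is an assumed example, and placing the placeholders before the text is an assumption about layout. The embedding expansion step is not shown here.

```python
from types import SimpleNamespace

IMAGE_TOKEN = "<image>"  # special placeholder token named in the note


def sync_num_query_tokens(processor, model):
    """Copy the query-token count from the model config onto the processor."""
    processor.num_query_tokens = model.config.num_query_tokens
    return processor


def expand_with_placeholders(text, num_query_tokens, image_token=IMAGE_TOKEN):
    """Illustrative only: one placeholder per query token, then the text."""
    return image_token * num_query_tokens + text


# Stand-ins (assumptions) for a real processor and model loaded from a checkpoint.
processor = SimpleNamespace(num_query_tokens=None)
model = SimpleNamespace(config=SimpleNamespace(num_query_tokens=32))

sync_num_query_tokens(processor, model)
prompt = expand_with_placeholders("Question: what is shown? Answer:",
                                  processor.num_query_tokens)

print(processor.num_query_tokens)  # 32
print(prompt.count(IMAGE_TOKEN))   # 32
```

Because every placeholder has to survive tokenization for the image embeddings to be merged, any maximum length applied to the text needs to leave room for all of them in addition to the prompt itself.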

Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with BLIP-2.

- Demo notebooks for BLIP-2 for image captioning, visual question answering (VQA) and chat-like conversations can be found here.

If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

Blip2Config

autodoc Blip2Config
    - from_vision_qformer_text_configs

Blip2VisionConfig

autodoc Blip2VisionConfig

Blip2QFormerConfig

autodoc Blip2QFormerConfig

Blip2Processor

autodoc Blip2Processor

Blip2VisionModel

autodoc Blip2VisionModel
    - forward

Blip2QFormerModel

autodoc Blip2QFormerModel
    - forward

Blip2Model

autodoc Blip2Model
    - forward
    - get_text_features
    - get_image_features
    - get_qformer_features

Blip2ForConditionalGeneration

autodoc Blip2ForConditionalGeneration
    - forward
    - generate

Blip2ForImageTextRetrieval

autodoc Blip2ForImageTextRetrieval
    - forward

Blip2TextModelWithProjection

autodoc Blip2TextModelWithProjection

Blip2VisionModelWithProjection

autodoc Blip2VisionModelWithProjection