mirror of

https://github.com/huggingface/transformers.git

synced 2025-07-05 05:40:05 +06:00


Optimization

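The .optimization module provides:

- an optimizer with fixed weight decay that can be used to fine-tune models,

- several schedules in the form of schedule objects that inherit from _LRSchedule, and

- a gradient accumulation class to accumulate the gradients of multiple batches.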

AdamW (PyTorch)

autodoc AdamW
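To illustrate what "fixed" (decoupled) weight decay means, here is a minimal sketch of one update step for a single scalar parameter. It is not the library's implementation: the function name `adamw_step` and its default hyperparameters are assumptions for this example, and it follows one common formulation in which the decay shrinks the parameter directly instead of being added to the gradient.

```python
import math


def adamw_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One Adam step with decoupled weight decay on a scalar parameter.

    Hypothetical helper for illustration only. `t` is the 1-based step count;
    `m` and `v` are the running first and second moment estimates.
    """
    # Decoupled weight decay: shrink the parameter directly, so the decay
    # is not rescaled by the adaptive second-moment term below.
    param = param * (1.0 - lr * weight_decay)

    # Standard Adam moment updates with bias correction.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)

    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# With a zero gradient, only the weight decay acts on the parameter.
decayed, _, _ = adamw_step(1.0, 0.0, m=0.0, v=0.0, t=1)
```

With a zero gradient the parameter is simply multiplied by `1 - lr * weight_decay`, which makes it easy to see that the decay is independent of the gradient statistics.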

AdaFactor (PyTorch)

autodoc Adafactor

AdamWeightDecay (TensorFlow)

autodoc AdamWeightDecay

autodoc create_optimizer

Schedules

Learning Rate Schedules (PyTorch)

autodoc SchedulerType

autodoc get_scheduler

autodoc get_constant_schedule

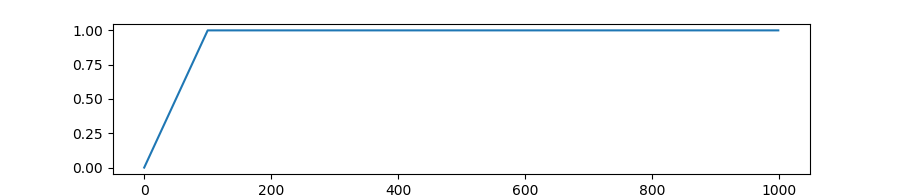

autodoc get_constant_schedule_with_warmup

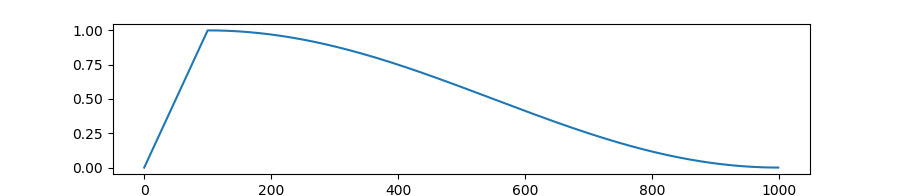

autodoc get_cosine_schedule_with_warmup

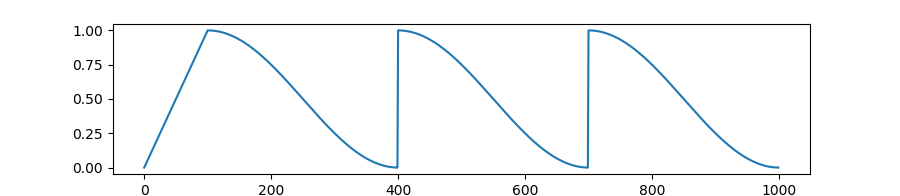
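The shape of a cosine schedule with warmup can be sketched as a multiplier applied to the base learning rate. The function below is an illustrative pure-Python approximation, not the library code; the name `cosine_warmup_factor` is hypothetical, and the default `num_cycles=0.5` is assumed so that the factor falls from 1 to 0 over a single half cosine wave.

```python
import math


def cosine_warmup_factor(step, num_warmup_steps, num_training_steps,
                         num_cycles=0.5):
    """Learning-rate multiplier: linear warmup, then cosine decay.

    Hypothetical helper for illustration only.
    """
    if step < num_warmup_steps:
        # Linear ramp from 0 to 1 during warmup.
        return step / max(1, num_warmup_steps)
    # Fraction of the post-warmup phase that has been completed.
    progress = (step - num_warmup_steps) / max(
        1, num_training_steps - num_warmup_steps
    )
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * num_cycles * 2.0 * progress)))


base_lr = 5e-5  # assumed base learning rate for the example
cosine_lrs = [base_lr * cosine_warmup_factor(s, 10, 100) for s in range(101)]
```

The factor peaks at 1 when warmup ends, passes through roughly 0.5 halfway through the decay phase, and approaches 0 at the final step.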

autodoc get_cosine_with_hard_restarts_schedule_with_warmup

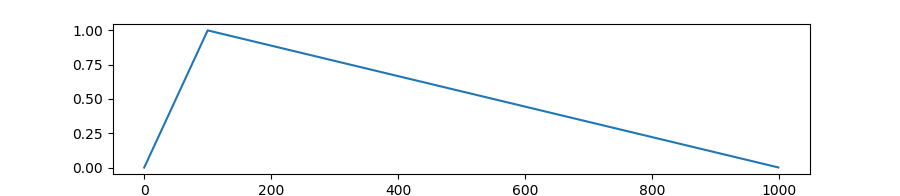

autodoc get_linear_schedule_with_warmup
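As a rough sketch of what a linear schedule with warmup does, the multiplier below rises linearly from 0 to 1 over the warmup steps and then falls linearly back to 0 by the last training step. This is an illustration under stated assumptions rather than the library's code: the function name `linear_warmup_decay_factor` and the example values are hypothetical.

```python
def linear_warmup_decay_factor(step, num_warmup_steps, num_training_steps):
    """Learning-rate multiplier: linear warmup, then linear decay to 0.

    Hypothetical helper for illustration only.
    """
    if step < num_warmup_steps:
        # Linear ramp from 0 to 1 during warmup.
        return step / max(1, num_warmup_steps)
    # Linear decay from 1 to 0 over the remaining steps, clamped at 0.
    remaining = num_training_steps - step
    return max(0.0, remaining / max(1, num_training_steps - num_warmup_steps))


base_lr = 5e-5  # assumed base learning rate for the example
linear_lrs = [base_lr * linear_warmup_decay_factor(s, 10, 100) for s in range(101)]
```

Steps past `num_training_steps` yield a factor of 0 because of the clamp, so the learning rate never becomes negative.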

autodoc get_polynomial_decay_schedule_with_warmup

autodoc get_inverse_sqrt_schedule

Warmup (TensorFlow)

autodoc WarmUp

Gradient Strategies

GradientAccumulator (TensorFlow)

autodoc GradientAccumulator
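The idea of accumulating the gradients of several batches before applying a single optimizer step can be sketched in plain Python. The class below is a hypothetical stand-in written for this example; it is not the TensorFlow `GradientAccumulator` documented above, and it uses lists of floats in place of tensors.

```python
class SimpleGradientAccumulator:
    """Sums per-parameter gradients across batches (illustrative only)."""

    def __init__(self):
        self._sums = None  # running sum per parameter
        self.step = 0      # number of batches accumulated so far

    def __call__(self, gradients):
        """Add one batch of gradients to the running sums."""
        if self._sums is None:
            self._sums = [0.0] * len(gradients)
        if len(gradients) != len(self._sums):
            raise ValueError(
                f"Expected {len(self._sums)} gradients, got {len(gradients)}"
            )
        self._sums = [s + g for s, g in zip(self._sums, gradients)]
        self.step += 1

    @property
    def gradients(self):
        """Accumulated (summed) gradients."""
        return list(self._sums) if self._sums is not None else []

    def averaged(self):
        """Accumulated gradients divided by the number of batches."""
        if self.step == 0:
            return []
        return [s / self.step for s in self._sums]

    def reset(self):
        """Clear the sums after the optimizer step has been applied."""
        self._sums = None
        self.step = 0


accumulator = SimpleGradientAccumulator()
for batch_grads in ([1.0, 2.0], [3.0, 4.0]):  # made-up per-batch gradients
    accumulator(batch_grads)
mean_grads = accumulator.averaged()
accumulator.reset()
```

Averaging the sums over the number of accumulated batches approximates the gradient of one larger batch, which is the usual reason to accumulate when memory limits the per-step batch size.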