* Add conversion script * Add NougatImageProcessor * Add crop margin * More improvements * Add docs, READMEs * Remove print statements * Include model_max_length * Add NougatTokenizerFast * Fix imports * Improve postprocessing * Improve image processor * Fix image processor * Improve normalize method * More improvements * More improvements * Add processor, improve docs * Simplify fast tokenizer * Remove test file * Fix docstrings * Use NougatProcessor in conversion script * Add is_levensthein_available * Add tokenizer tests * More improvements * Use numpy instead of opencv * Add is_cv2_available * Fix cv2_available * Add is_nltk_available * Add image processor tests, improve crop_margin * Add integration tests * Improve integration test * Use do_rescale instead of hacks, thanks Amy * Remove random_padding * Address comments * Address more comments * Add import * Address more comments * Address more comments * Address comment * Address comment * Set max_model_input_sizes * Add tests * Add requires_backends * Add Nougat to exotic tests * Use to_pil_image * Address comment regarding nltk * Add NLTK * Improve variable names, integration test * Add test * refactor, document, and test regexes * remove named capture groups, add comments * format * add non-markdown fixed tokenization * format * correct flakyness of args parse * add regex comments * test functionalities for crop_image, align long axis and expected output * add regex tests * remove cv2 dependency * test crop_margin equality between cv2 and python * refactor table regexes to markdown add newline * change print to log, improve doc * fix high count tables correction * address PR comments: naming, linting, asserts * Address comments * Add copied from * Update conversion script * Update conversion script to convert both small and base versions * Add inference example * Add more info * Fix style * Add require annotators to test * Define all keyword arguments explicitly * Move cv2 annotator * Add tokenizer init method * Transfer checkpoints * Add reference to Donut * Address comments * Skip test * Remove cv2 method * Add copied from statements * Use cached_property * Fix docstring * Add file to not doctested --------- Co-authored-by: Pablo Montalvo <pablo.montalvo.leroux@gmail.com>

5.2 KiB

Nougat

Overview

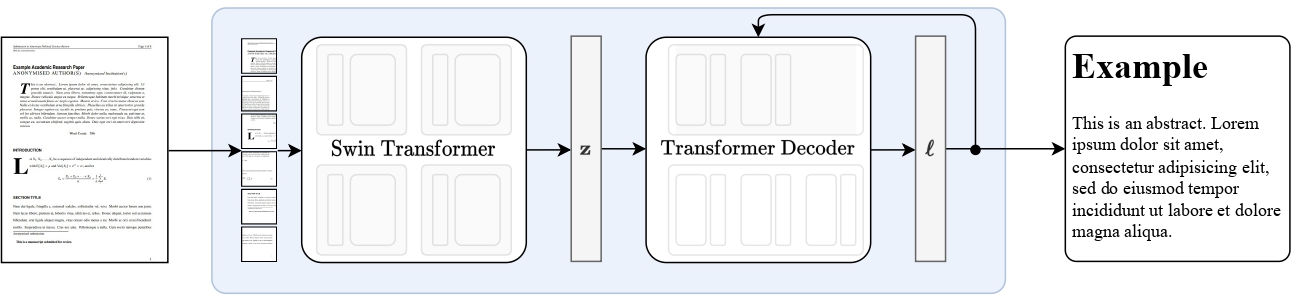

The Nougat model was proposed in Nougat: Neural Optical Understanding for Academic Documents by Lukas Blecher, Guillem Cucurull, Thomas Scialom, Robert Stojnic. Nougat uses the same architecture as Donut, meaning an image Transformer encoder and an autoregressive text Transformer decoder to translate scientific PDFs to markdown, enabling easier access to them.

The abstract from the paper is the following:

Scientific knowledge is predominantly stored in books and scientific journals, often in the form of PDFs. However, the PDF format leads to a loss of semantic information, particularly for mathematical expressions. We propose Nougat (Neural Optical Understanding for Academic Documents), a Visual Transformer model that performs an Optical Character Recognition (OCR) task for processing scientific documents into a markup language, and demonstrate the effectiveness of our model on a new dataset of scientific documents. The proposed approach offers a promising solution to enhance the accessibility of scientific knowledge in the digital age, by bridging the gap between human-readable documents and machine-readable text. We release the models and code to accelerate future work on scientific text recognition.

Nougat high-level overview. Taken from the original paper.

This model was contributed by nielsr. The original code can be found here.

Tips:

- The quickest way to get started with Nougat is by checking the tutorial notebooks, which show how to use the model at inference time as well as fine-tuning on custom data.

- Nougat is always used within the VisionEncoderDecoder framework. The model is identical to Donut in terms of architecture.

Inference

Nougat's [VisionEncoderDecoder] model accepts images as input and makes use of

[~generation.GenerationMixin.generate] to autoregressively generate text given the input image.

The [NougatImageProcessor] class is responsible for preprocessing the input image and

[NougatTokenizerFast] decodes the generated target tokens to the target string. The

[NougatProcessor] wraps [NougatImageProcessor] and [NougatTokenizerFast] classes

into a single instance to both extract the input features and decode the predicted token ids.

- Step-by-step PDF transcription

>>> from huggingface_hub import hf_hub_download

>>> import re

>>> from PIL import Image

>>> from transformers import NougatProcessor, VisionEncoderDecoderModel

>>> from datasets import load_dataset

>>> import torch

>>> processor = NougatProcessor.from_pretrained("facebook/nougat-base")

>>> model = VisionEncoderDecoderModel.from_pretrained("facebook/nougat-base")

>>> device = "cuda" if torch.cuda.is_available() else "cpu"

>>> model.to(device) # doctest: +IGNORE_RESULT

>>> # prepare PDF image for the model

>>> filepath = hf_hub_download(repo_id="hf-internal-testing/fixtures_docvqa", filename="nougat_paper.png", repo_type="dataset")

>>> image = Image.open(filepath)

>>> pixel_values = processor(image, return_tensors="pt").pixel_values

>>> # generate transcription (here we only generate 30 tokens)

>>> outputs = model.generate(

... pixel_values.to(device),

... min_length=1,

... max_new_tokens=30,

... bad_words_ids=[[processor.tokenizer.unk_token_id]],

... )

>>> sequence = processor.batch_decode(outputs, skip_special_tokens=True)[0]

>>> sequence = processor.post_process_generation(sequence, fix_markdown=False)

>>> # note: we're using repr here such for the sake of printing the \n characters, feel free to just print the sequence

>>> print(repr(sequence))

'\n\n# Nougat: Neural Optical Understanding for Academic Documents\n\n Lukas Blecher\n\nCorrespondence to: lblecher@'

See the model hub to look for Nougat checkpoints.

NougatImageProcessor

autodoc NougatImageProcessor - preprocess

NougatTokenizerFast

autodoc NougatTokenizerFast

NougatProcessor

autodoc NougatProcessor - call - from_pretrained - save_pretrained - batch_decode - decode - post_process_generation