mirror of

https://github.com/huggingface/transformers.git

synced 2025-07-03 12:50:06 +06:00

Add FastSpeech2Conformer (#23439)

* start - docs, SpeechT5 copy and rename * add relevant code from FastSpeech2 draft, have tests pass * make it an actual conformer, demo ex. * matching inference with original repo, includes debug code * refactor nn.Sequentials, start more desc. var names * more renaming * more renaming * vocoder scratchwork * matching vocoder outputs * hifigan vocoder conversion script * convert model script, rename some config vars * replace postnet with speecht5's implementation * passing common tests, file cleanup * expand testing, add output hidden states and attention * tokenizer + passing tokenizer tests * variety of updates and tests * g2p_en pckg setup * import structure edits * docstrings and cleanup * repo consistency * deps * small cleanup * forward signature param order * address comments except for masks and labels * address comments on attention_mask and labels * address second round of comments * remove old unneeded line * address comments part 1 * address comments pt 2 * rename auto mapping * fixes for failing tests * address comments part 3 (bart-like, train loss) * make style * pass config where possible * add forward method + tests to WithHifiGan model * make style * address arg passing and generate_speech comments * address Arthur comments * address Arthur comments pt2 * lint changes * Sanchit comment * add g2p-en to doctest deps * move up self.encoder * onnx compatible tensor method * fix is symbolic * fix paper url * move models to espnet org * make style * make fix-copies * update docstring * Arthur comments * update docstring w/ new updates * add model architecture images * header size * md wording update * make style

This commit is contained in:

parent

6eba901d88

commit

d83ff5eeff

@ -515,6 +515,7 @@ doc_test_job = CircleCIJob(

|

||||

"pip install -U --upgrade-strategy eager -e git+https://github.com/huggingface/accelerate@main#egg=accelerate",

|

||||

"pip install --upgrade --upgrade-strategy eager pytest pytest-sugar",

|

||||

"pip install -U --upgrade-strategy eager natten",

|

||||

"pip install -U --upgrade-strategy eager g2p-en",

|

||||

"find -name __pycache__ -delete",

|

||||

"find . -name \*.pyc -delete",

|

||||

# Add an empty file to keep the test step running correctly even no file is selected to be tested.

|

||||

|

||||

@ -358,6 +358,7 @@ Current number of checkpoints: ** (from Baidu) released with the paper [ERNIE-M: Enhanced Multilingual Representation by Aligning Cross-lingual Semantics with Monolingual Corpora](https://arxiv.org/abs/2012.15674) by Xuan Ouyang, Shuohuan Wang, Chao Pang, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang.

|

||||

1. **[ESM](https://huggingface.co/docs/transformers/model_doc/esm)** (from Meta AI) are transformer protein language models. **ESM-1b** was released with the paper [Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences](https://www.pnas.org/content/118/15/e2016239118) by Alexander Rives, Joshua Meier, Tom Sercu, Siddharth Goyal, Zeming Lin, Jason Liu, Demi Guo, Myle Ott, C. Lawrence Zitnick, Jerry Ma, and Rob Fergus. **ESM-1v** was released with the paper [Language models enable zero-shot prediction of the effects of mutations on protein function](https://doi.org/10.1101/2021.07.09.450648) by Joshua Meier, Roshan Rao, Robert Verkuil, Jason Liu, Tom Sercu and Alexander Rives. **ESM-2 and ESMFold** were released with the paper [Language models of protein sequences at the scale of evolution enable accurate structure prediction](https://doi.org/10.1101/2022.07.20.500902) by Zeming Lin, Halil Akin, Roshan Rao, Brian Hie, Zhongkai Zhu, Wenting Lu, Allan dos Santos Costa, Maryam Fazel-Zarandi, Tom Sercu, Sal Candido, Alexander Rives.

|

||||

1. **[Falcon](https://huggingface.co/docs/transformers/model_doc/falcon)** (from Technology Innovation Institute) by Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Heslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (from ESPnet) released with the paper [Recent Developments On Espnet Toolkit Boosted By Conformer](https://arxiv.org/abs/2010.13956) by Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.

|

||||

1. **[FLAN-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FLAN-UL2](https://huggingface.co/docs/transformers/model_doc/flan-ul2)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-ul2-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](https://huggingface.co/docs/transformers/model_doc/flaubert)** (from CNRS) released with the paper [FlauBERT: Unsupervised Language Model Pre-training for French](https://arxiv.org/abs/1912.05372) by Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier, Didier Schwab.

|

||||

|

||||

@ -333,6 +333,7 @@ Número actual de puntos de control: ** (from Baidu) released with the paper [ERNIE-M: Enhanced Multilingual Representation by Aligning Cross-lingual Semantics with Monolingual Corpora](https://arxiv.org/abs/2012.15674) by Xuan Ouyang, Shuohuan Wang, Chao Pang, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang.

|

||||

1. **[ESM](https://huggingface.co/docs/transformers/model_doc/esm)** (from Meta AI) are transformer protein language models. **ESM-1b** was released with the paper [Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences](https://www.pnas.org/content/118/15/e2016239118) by Alexander Rives, Joshua Meier, Tom Sercu, Siddharth Goyal, Zeming Lin, Jason Liu, Demi Guo, Myle Ott, C. Lawrence Zitnick, Jerry Ma, and Rob Fergus. **ESM-1v** was released with the paper [Language models enable zero-shot prediction of the effects of mutations on protein function](https://doi.org/10.1101/2021.07.09.450648) by Joshua Meier, Roshan Rao, Robert Verkuil, Jason Liu, Tom Sercu and Alexander Rives. **ESM-2** was released with the paper [Language models of protein sequences at the scale of evolution enable accurate structure prediction](https://doi.org/10.1101/2022.07.20.500902) by Zeming Lin, Halil Akin, Roshan Rao, Brian Hie, Zhongkai Zhu, Wenting Lu, Allan dos Santos Costa, Maryam Fazel-Zarandi, Tom Sercu, Sal Candido, Alexander Rives.

|

||||

1. **[Falcon](https://huggingface.co/docs/transformers/model_doc/falcon)** (from Technology Innovation Institute) by Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Heslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (from ESPnet) released with the paper [Recent Developments On Espnet Toolkit Boosted By Conformer](https://arxiv.org/abs/2010.13956) by Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.

|

||||

1. **[FLAN-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FLAN-UL2](https://huggingface.co/docs/transformers/model_doc/flan-ul2)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-ul2-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](https://huggingface.co/docs/transformers/model_doc/flaubert)** (from CNRS) released with the paper [FlauBERT: Unsupervised Language Model Pre-training for French](https://arxiv.org/abs/1912.05372) by Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier, Didier Schwab.

|

||||

|

||||

@ -307,6 +307,7 @@ conda install -c huggingface transformers

|

||||

1. **[ErnieM](https://huggingface.co/docs/transformers/model_doc/ernie_m)** (Baidu से) Xuan Ouyang, Shuohuan Wang, Chao Pang, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang. द्वाराअनुसंधान पत्र [ERNIE-M: Enhanced Multilingual Representation by Aligning Cross-lingual Semantics with Monolingual Corpora](https://arxiv.org/abs/2012.15674) के साथ जारी किया गया

|

||||

1. **[ESM](https://huggingface.co/docs/transformers/model_doc/esm)** (मेटा AI से) ट्रांसफॉर्मर प्रोटीन भाषा मॉडल हैं। **ESM-1b** पेपर के साथ जारी किया गया था [ अलेक्जेंडर राइव्स, जोशुआ मेयर, टॉम सर्कु, सिद्धार्थ गोयल, ज़ेमिंग लिन द्वारा जैविक संरचना और कार्य असुरक्षित सीखने को 250 मिलियन प्रोटीन अनुक्रमों तक स्केल करने से उभरता है] (https://www.pnas.org/content/118/15/e2016239118) जेसन लियू, डेमी गुओ, मायल ओट, सी. लॉरेंस ज़िटनिक, जेरी मा और रॉब फर्गस। **ESM-1v** को पेपर के साथ जारी किया गया था [भाषा मॉडल प्रोटीन फ़ंक्शन पर उत्परिवर्तन के प्रभावों की शून्य-शॉट भविष्यवाणी को सक्षम करते हैं] (https://doi.org/10.1101/2021.07.09.450648) जोशुआ मेयर, रोशन राव, रॉबर्ट वेरकुइल, जेसन लियू, टॉम सर्कु और अलेक्जेंडर राइव्स द्वारा। **ESM-2** को पेपर के साथ जारी किया गया था [भाषा मॉडल विकास के पैमाने पर प्रोटीन अनुक्रम सटीक संरचना भविष्यवाणी को सक्षम करते हैं](https://doi.org/10.1101/2022.07.20.500902) ज़ेमिंग लिन, हलील अकिन, रोशन राव, ब्रायन ही, झोंगकाई झू, वेंटिंग लू, ए द्वारा लान डॉस सैंटोस कोस्टा, मरियम फ़ज़ल-ज़रंडी, टॉम सर्कू, साल कैंडिडो, अलेक्जेंडर राइव्स।

|

||||

1. **[Falcon](https://huggingface.co/docs/transformers/model_doc/falcon)** (from Technology Innovation Institute) by Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Heslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (ESPnet and Microsoft Research से) Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang. द्वाराअनुसंधान पत्र [Fastspeech 2: Fast And High-quality End-to-End Text To Speech](https://arxiv.org/pdf/2006.04558.pdf) के साथ जारी किया गया

|

||||

1. **[FLAN-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FLAN-UL2](https://huggingface.co/docs/transformers/model_doc/flan-ul2)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-ul2-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](https://huggingface.co/docs/transformers/model_doc/flaubert)** (CNRS से) साथ वाला पेपर [FlauBERT: Unsupervised Language Model Pre-training for फ़्रेंच](https://arxiv .org/abs/1912.05372) Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, बेंजामिन लेकोउटेक्स, अलेक्जेंड्रे अल्लाउज़ेन, बेनोइट क्रैबे, लॉरेंट बेसेसियर, डिडिएर श्वाब द्वारा।

|

||||

|

||||

@ -367,6 +367,7 @@ Flax、PyTorch、TensorFlowをcondaでインストールする方法は、それ

|

||||

1. **[ErnieM](https://huggingface.co/docs/transformers/model_doc/ernie_m)** (Baidu から) Xuan Ouyang, Shuohuan Wang, Chao Pang, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang. から公開された研究論文 [ERNIE-M: Enhanced Multilingual Representation by Aligning Cross-lingual Semantics with Monolingual Corpora](https://arxiv.org/abs/2012.15674)

|

||||

1. **[ESM](https://huggingface.co/docs/transformers/model_doc/esm)** (Meta AI から) はトランスフォーマープロテイン言語モデルです. **ESM-1b** は Alexander Rives, Joshua Meier, Tom Sercu, Siddharth Goyal, Zeming Lin, Jason Liu, Demi Guo, Myle Ott, C. Lawrence Zitnick, Jerry Ma, and Rob Fergus から公開された研究論文: [Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences](https://www.pnas.org/content/118/15/e2016239118). **ESM-1v** は Joshua Meier, Roshan Rao, Robert Verkuil, Jason Liu, Tom Sercu and Alexander Rives から公開された研究論文: [Language models enable zero-shot prediction of the effects of mutations on protein function](https://doi.org/10.1101/2021.07.09.450648). **ESM-2** と **ESMFold** は Zeming Lin, Halil Akin, Roshan Rao, Brian Hie, Zhongkai Zhu, Wenting Lu, Allan dos Santos Costa, Maryam Fazel-Zarandi, Tom Sercu, Sal Candido, Alexander Rives から公開された研究論文: [Language models of protein sequences at the scale of evolution enable accurate structure prediction](https://doi.org/10.1101/2022.07.20.500902)

|

||||

1. **[Falcon](https://huggingface.co/docs/transformers/model_doc/falcon)** (from Technology Innovation Institute) by Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Heslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (ESPnet and Microsoft Research から) Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang. から公開された研究論文 [Fastspeech 2: Fast And High-quality End-to-End Text To Speech](https://arxiv.org/pdf/2006.04558.pdf)

|

||||

1. **[FLAN-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5)** (Google AI から) Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V から公開されたレポジトリー [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) Le, and Jason Wei

|

||||

1. **[FLAN-UL2](https://huggingface.co/docs/transformers/model_doc/flan-ul2)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-ul2-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](https://huggingface.co/docs/transformers/model_doc/flaubert)** (CNRS から) Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier, Didier Schwab から公開された研究論文: [FlauBERT: Unsupervised Language Model Pre-training for French](https://arxiv.org/abs/1912.05372)

|

||||

|

||||

@ -282,6 +282,7 @@ Flax, PyTorch, TensorFlow 설치 페이지에서 이들을 conda로 설치하는

|

||||

1. **[ErnieM](https://huggingface.co/docs/transformers/model_doc/ernie_m)** (Baidu 에서 제공)은 Xuan Ouyang, Shuohuan Wang, Chao Pang, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang.의 [ERNIE-M: Enhanced Multilingual Representation by Aligning Cross-lingual Semantics with Monolingual Corpora](https://arxiv.org/abs/2012.15674)논문과 함께 발표했습니다.

|

||||

1. **[ESM](https://huggingface.co/docs/transformers/model_doc/esm)** (from Meta AI) are transformer protein language models. **ESM-1b** was released with the paper [Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences](https://www.pnas.org/content/118/15/e2016239118) by Alexander Rives, Joshua Meier, Tom Sercu, Siddharth Goyal, Zeming Lin, Jason Liu, Demi Guo, Myle Ott, C. Lawrence Zitnick, Jerry Ma, and Rob Fergus. **ESM-1v** was released with the paper [Language models enable zero-shot prediction of the effects of mutations on protein function](https://doi.org/10.1101/2021.07.09.450648) by Joshua Meier, Roshan Rao, Robert Verkuil, Jason Liu, Tom Sercu and Alexander Rives. **ESM-2** was released with the paper [Language models of protein sequences at the scale of evolution enable accurate structure prediction](https://doi.org/10.1101/2022.07.20.500902) by Zeming Lin, Halil Akin, Roshan Rao, Brian Hie, Zhongkai Zhu, Wenting Lu, Allan dos Santos Costa, Maryam Fazel-Zarandi, Tom Sercu, Sal Candido, Alexander Rives.

|

||||

1. **[Falcon](https://huggingface.co/docs/transformers/model_doc/falcon)** (from Technology Innovation Institute) by Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Heslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (ESPnet and Microsoft Research 에서 제공)은 Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.의 [Fastspeech 2: Fast And High-quality End-to-End Text To Speech](https://arxiv.org/pdf/2006.04558.pdf)논문과 함께 발표했습니다.

|

||||

1. **[FLAN-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FLAN-UL2](https://huggingface.co/docs/transformers/model_doc/flan-ul2)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-ul2-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](https://huggingface.co/docs/transformers/model_doc/flaubert)** (from CNRS) released with the paper [FlauBERT: Unsupervised Language Model Pre-training for French](https://arxiv.org/abs/1912.05372) by Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier, Didier Schwab.

|

||||

|

||||

@ -306,6 +306,7 @@ conda install -c huggingface transformers

|

||||

1. **[ErnieM](https://huggingface.co/docs/transformers/model_doc/ernie_m)** (来自 Baidu) 伴随论文 [ERNIE-M: Enhanced Multilingual Representation by Aligning Cross-lingual Semantics with Monolingual Corpora](https://arxiv.org/abs/2012.15674) 由 Xuan Ouyang, Shuohuan Wang, Chao Pang, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang 发布。

|

||||

1. **[ESM](https://huggingface.co/docs/transformers/model_doc/esm)** (from Meta AI) are transformer protein language models. **ESM-1b** was released with the paper [Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences](https://www.pnas.org/content/118/15/e2016239118) by Alexander Rives, Joshua Meier, Tom Sercu, Siddharth Goyal, Zeming Lin, Jason Liu, Demi Guo, Myle Ott, C. Lawrence Zitnick, Jerry Ma, and Rob Fergus. **ESM-1v** was released with the paper [Language models enable zero-shot prediction of the effects of mutations on protein function](https://doi.org/10.1101/2021.07.09.450648) by Joshua Meier, Roshan Rao, Robert Verkuil, Jason Liu, Tom Sercu and Alexander Rives. **ESM-2** was released with the paper [Language models of protein sequences at the scale of evolution enable accurate structure prediction](https://doi.org/10.1101/2022.07.20.500902) by Zeming Lin, Halil Akin, Roshan Rao, Brian Hie, Zhongkai Zhu, Wenting Lu, Allan dos Santos Costa, Maryam Fazel-Zarandi, Tom Sercu, Sal Candido, Alexander Rives.

|

||||

1. **[Falcon](https://huggingface.co/docs/transformers/model_doc/falcon)** (from Technology Innovation Institute) by Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Heslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (来自 ESPnet and Microsoft Research) 伴随论文 [Fastspeech 2: Fast And High-quality End-to-End Text To Speech](https://arxiv.org/pdf/2006.04558.pdf) 由 Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang 发布。

|

||||

1. **[FLAN-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FLAN-UL2](https://huggingface.co/docs/transformers/model_doc/flan-ul2)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-ul2-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](https://huggingface.co/docs/transformers/model_doc/flaubert)** (来自 CNRS) 伴随论文 [FlauBERT: Unsupervised Language Model Pre-training for French](https://arxiv.org/abs/1912.05372) 由 Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier, Didier Schwab 发布。

|

||||

|

||||

@ -318,6 +318,7 @@ conda install -c huggingface transformers

|

||||

1. **[ErnieM](https://huggingface.co/docs/transformers/model_doc/ernie_m)** (from Baidu) released with the paper [ERNIE-M: Enhanced Multilingual Representation by Aligning Cross-lingual Semantics with Monolingual Corpora](https://arxiv.org/abs/2012.15674) by Xuan Ouyang, Shuohuan Wang, Chao Pang, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang.

|

||||

1. **[ESM](https://huggingface.co/docs/transformers/model_doc/esm)** (from Meta AI) are transformer protein language models. **ESM-1b** was released with the paper [Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences](https://www.pnas.org/content/118/15/e2016239118) by Alexander Rives, Joshua Meier, Tom Sercu, Siddharth Goyal, Zeming Lin, Jason Liu, Demi Guo, Myle Ott, C. Lawrence Zitnick, Jerry Ma, and Rob Fergus. **ESM-1v** was released with the paper [Language models enable zero-shot prediction of the effects of mutations on protein function](https://doi.org/10.1101/2021.07.09.450648) by Joshua Meier, Roshan Rao, Robert Verkuil, Jason Liu, Tom Sercu and Alexander Rives. **ESM-2** was released with the paper [Language models of protein sequences at the scale of evolution enable accurate structure prediction](https://doi.org/10.1101/2022.07.20.500902) by Zeming Lin, Halil Akin, Roshan Rao, Brian Hie, Zhongkai Zhu, Wenting Lu, Allan dos Santos Costa, Maryam Fazel-Zarandi, Tom Sercu, Sal Candido, Alexander Rives.

|

||||

1. **[Falcon](https://huggingface.co/docs/transformers/model_doc/falcon)** (from Technology Innovation Institute) by Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Heslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (from ESPnet and Microsoft Research) released with the paper [Fastspeech 2: Fast And High-quality End-to-End Text To Speech](https://arxiv.org/pdf/2006.04558.pdf) by Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.

|

||||

1. **[FLAN-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FLAN-UL2](https://huggingface.co/docs/transformers/model_doc/flan-ul2)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-ul2-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](https://huggingface.co/docs/transformers/model_doc/flaubert)** (from CNRS) released with the paper [FlauBERT: Unsupervised Language Model Pre-training for French](https://arxiv.org/abs/1912.05372) by Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier, Didier Schwab.

|

||||

|

||||

@ -332,6 +332,8 @@

|

||||

title: ESM

|

||||

- local: model_doc/falcon

|

||||

title: Falcon

|

||||

- local: model_doc/fastspeech2_conformer

|

||||

title: FastSpeech2Conformer

|

||||

- local: model_doc/flan-t5

|

||||

title: FLAN-T5

|

||||

- local: model_doc/flan-ul2

|

||||

|

||||

@ -132,6 +132,7 @@ Flax), PyTorch, and/or TensorFlow.

|

||||

| [ESM](model_doc/esm) | ✅ | ✅ | ❌ |

|

||||

| [FairSeq Machine-Translation](model_doc/fsmt) | ✅ | ❌ | ❌ |

|

||||

| [Falcon](model_doc/falcon) | ✅ | ❌ | ❌ |

|

||||

| [FastSpeech2Conformer](model_doc/fastspeech2_conformer) | ✅ | ❌ | ❌ |

|

||||

| [FLAN-T5](model_doc/flan-t5) | ✅ | ✅ | ✅ |

|

||||

| [FLAN-UL2](model_doc/flan-ul2) | ✅ | ✅ | ✅ |

|

||||

| [FlauBERT](model_doc/flaubert) | ✅ | ✅ | ❌ |

|

||||

|

||||

134

docs/source/en/model_doc/fastspeech2_conformer.md

Normal file

134

docs/source/en/model_doc/fastspeech2_conformer.md

Normal file

@ -0,0 +1,134 @@

|

||||

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

|

||||

the License. You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

|

||||

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

|

||||

specific language governing permissions and limitations under the License.

|

||||

-->

|

||||

|

||||

# FastSpeech2Conformer

|

||||

|

||||

## Overview

|

||||

|

||||

The FastSpeech2Conformer model was proposed with the paper [Recent Developments On Espnet Toolkit Boosted By Conformer](https://arxiv.org/abs/2010.13956) by Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.

|

||||

|

||||

The abstract from the original FastSpeech2 paper is the following:

|

||||

|

||||

*Non-autoregressive text to speech (TTS) models such as FastSpeech (Ren et al., 2019) can synthesize speech significantly faster than previous autoregressive models with comparable quality. The training of FastSpeech model relies on an autoregressive teacher model for duration prediction (to provide more information as input) and knowledge distillation (to simplify the data distribution in output), which can ease the one-to-many mapping problem (i.e., multiple speech variations correspond to the same text) in TTS. However, FastSpeech has several disadvantages: 1) the teacher-student distillation pipeline is complicated and time-consuming, 2) the duration extracted from the teacher model is not accurate enough, and the target mel-spectrograms distilled from teacher model suffer from information loss due to data simplification, both of which limit the voice quality. In this paper, we propose FastSpeech 2, which addresses the issues in FastSpeech and better solves the one-to-many mapping problem in TTS by 1) directly training the model with ground-truth target instead of the simplified output from teacher, and 2) introducing more variation information of speech (e.g., pitch, energy and more accurate duration) as conditional inputs. Specifically, we extract duration, pitch and energy from speech waveform and directly take them as conditional inputs in training and use predicted values in inference. We further design FastSpeech 2s, which is the first attempt to directly generate speech waveform from text in parallel, enjoying the benefit of fully end-to-end inference. Experimental results show that 1) FastSpeech 2 achieves a 3x training speed-up over FastSpeech, and FastSpeech 2s enjoys even faster inference speed; 2) FastSpeech 2 and 2s outperform FastSpeech in voice quality, and FastSpeech 2 can even surpass autoregressive models. Audio samples are available at https://speechresearch.github.io/fastspeech2/.*

|

||||

|

||||

This model was contributed by [Connor Henderson](https://huggingface.co/connor-henderson). The original code can be found [here](https://github.com/espnet/espnet/blob/master/espnet2/tts/fastspeech2/fastspeech2.py).

|

||||

|

||||

|

||||

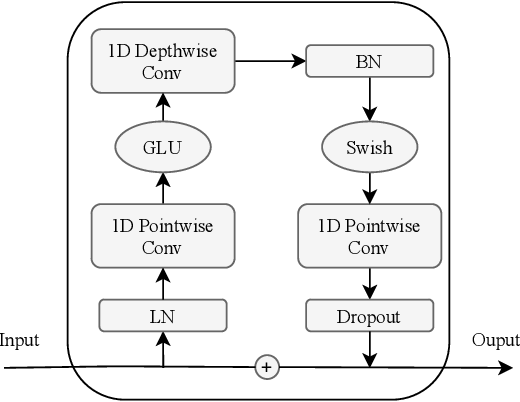

## 🤗 Model Architecture

|

||||

FastSpeech2's general structure with a Mel-spectrogram decoder was implemented, and the traditional transformer blocks were replaced with with conformer blocks as done in the ESPnet library.

|

||||

|

||||

#### FastSpeech2 Model Architecture

|

||||

|

||||

|

||||

#### Conformer Blocks

|

||||

|

||||

|

||||

#### Convolution Module

|

||||

|

||||

|

||||

## 🤗 Transformers Usage

|

||||

|

||||

You can run FastSpeech2Conformer locally with the 🤗 Transformers library.

|

||||

|

||||

1. First install the 🤗 [Transformers library](https://github.com/huggingface/transformers), g2p-en:

|

||||

|

||||

```

|

||||

pip install --upgrade pip

|

||||

pip install --upgrade transformers g2p-en

|

||||

```

|

||||

|

||||

2. Run inference via the Transformers modelling code with the model and hifigan separately

|

||||

|

||||

```python

|

||||

|

||||

from transformers import FastSpeech2ConformerTokenizer, FastSpeech2ConformerModel, FastSpeech2ConformerHifiGan

|

||||

import soundfile as sf

|

||||

|

||||

tokenizer = FastSpeech2ConformerTokenizer.from_pretrained("espnet/fastspeech2_conformer")

|

||||

inputs = tokenizer("Hello, my dog is cute.", return_tensors="pt")

|

||||

input_ids = inputs["input_ids"]

|

||||

|

||||

model = FastSpeech2ConformerModel.from_pretrained("espnet/fastspeech2_conformer")

|

||||

output_dict = model(input_ids, return_dict=True)

|

||||

spectrogram = output_dict["spectrogram"]

|

||||

|

||||

hifigan = FastSpeech2ConformerHifiGan.from_pretrained("espnet/fastspeech2_conformer_hifigan")

|

||||

waveform = hifigan(spectrogram)

|

||||

|

||||

sf.write("speech.wav", waveform.squeeze().detach().numpy(), samplerate=22050)

|

||||

```

|

||||

|

||||

3. Run inference via the Transformers modelling code with the model and hifigan combined

|

||||

|

||||

```python

|

||||

from transformers import FastSpeech2ConformerTokenizer, FastSpeech2ConformerWithHifiGan

|

||||

import soundfile as sf

|

||||

|

||||

tokenizer = FastSpeech2ConformerTokenizer.from_pretrained("espnet/fastspeech2_conformer")

|

||||

inputs = tokenizer("Hello, my dog is cute.", return_tensors="pt")

|

||||

input_ids = inputs["input_ids"]

|

||||

|

||||

model = FastSpeech2ConformerWithHifiGan.from_pretrained("espnet/fastspeech2_conformer_with_hifigan")

|

||||

output_dict = model(input_ids, return_dict=True)

|

||||

waveform = output_dict["waveform"]

|

||||

|

||||

sf.write("speech.wav", waveform.squeeze().detach().numpy(), samplerate=22050)

|

||||

```

|

||||

|

||||

4. Run inference with a pipeline and specify which vocoder to use

|

||||

```python

|

||||

from transformers import pipeline, FastSpeech2ConformerHifiGan

|

||||

import soundfile as sf

|

||||

|

||||

vocoder = FastSpeech2ConformerHifiGan.from_pretrained("espnet/fastspeech2_conformer_hifigan")

|

||||

synthesiser = pipeline(model="espnet/fastspeech2_conformer", vocoder=vocoder)

|

||||

|

||||

speech = synthesiser("Hello, my dog is cooler than you!")

|

||||

|

||||

sf.write("speech.wav", speech["audio"].squeeze(), samplerate=speech["sampling_rate"])

|

||||

```

|

||||

|

||||

|

||||

## FastSpeech2ConformerConfig

|

||||

|

||||

[[autodoc]] FastSpeech2ConformerConfig

|

||||

|

||||

## FastSpeech2ConformerHifiGanConfig

|

||||

|

||||

[[autodoc]] FastSpeech2ConformerHifiGanConfig

|

||||

|

||||

## FastSpeech2ConformerWithHifiGanConfig

|

||||

|

||||

[[autodoc]] FastSpeech2ConformerWithHifiGanConfig

|

||||

|

||||

## FastSpeech2ConformerTokenizer

|

||||

|

||||

[[autodoc]] FastSpeech2ConformerTokenizer

|

||||

- __call__

|

||||

- save_vocabulary

|

||||

- decode

|

||||

- batch_decode

|

||||

|

||||

## FastSpeech2ConformerModel

|

||||

|

||||

[[autodoc]] FastSpeech2ConformerModel

|

||||

- forward

|

||||

|

||||

## FastSpeech2ConformerHifiGan

|

||||

|

||||

[[autodoc]] FastSpeech2ConformerHifiGan

|

||||

- forward

|

||||

|

||||

## FastSpeech2ConformerWithHifiGan

|

||||

|

||||

[[autodoc]] FastSpeech2ConformerWithHifiGan

|

||||

- forward

|

||||

@ -44,10 +44,8 @@ Here's a code snippet you can use to listen to the resulting audio in a notebook

|

||||

For more examples on what Bark and other pretrained TTS models can do, refer to our

|

||||

[Audio course](https://huggingface.co/learn/audio-course/chapter6/pre-trained_models).

|

||||

|

||||

If you are looking to fine-tune a TTS model, you can currently fine-tune SpeechT5 only. SpeechT5 is pre-trained on a combination of

|

||||

speech-to-text and text-to-speech data, allowing it to learn a unified space of hidden representations shared by both text

|

||||

and speech. This means that the same pre-trained model can be fine-tuned for different tasks. Furthermore, SpeechT5

|

||||

supports multiple speakers through x-vector speaker embeddings.

|

||||

If you are looking to fine-tune a TTS model, the only text-to-speech models currently available in 🤗 Transformers

|

||||

are [SpeechT5](model_doc/speecht5) and [FastSpeech2Conformer](model_doc/fastspeech2_conformer), though more will be added in the future. SpeechT5 is pre-trained on a combination of speech-to-text and text-to-speech data, allowing it to learn a unified space of hidden representations shared by both text and speech. This means that the same pre-trained model can be fine-tuned for different tasks. Furthermore, SpeechT5 supports multiple speakers through x-vector speaker embeddings.

|

||||

|

||||

The remainder of this guide illustrates how to:

|

||||

|

||||

|

||||

@ -108,6 +108,7 @@ La documentation est organisée en 5 parties:

|

||||

1. **[EncoderDecoder](model_doc/encoder-decoder)** (from Google Research) released with the paper [Leveraging Pre-trained Checkpoints for Sequence Generation Tasks](https://arxiv.org/abs/1907.12461) by Sascha Rothe, Shashi Narayan, Aliaksei Severyn.

|

||||

1. **[ERNIE](model_doc/ernie)** (from Baidu) released with the paper [ERNIE: Enhanced Representation through Knowledge Integration](https://arxiv.org/abs/1904.09223) by Yu Sun, Shuohuan Wang, Yukun Li, Shikun Feng, Xuyi Chen, Han Zhang, Xin Tian, Danxiang Zhu, Hao Tian, Hua Wu.

|

||||

1. **[ESM](model_doc/esm)** (from Meta AI) are transformer protein language models. **ESM-1b** was released with the paper [Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences](https://www.pnas.org/content/118/15/e2016239118) by Alexander Rives, Joshua Meier, Tom Sercu, Siddharth Goyal, Zeming Lin, Jason Liu, Demi Guo, Myle Ott, C. Lawrence Zitnick, Jerry Ma, and Rob Fergus. **ESM-1v** was released with the paper [Language models enable zero-shot prediction of the effects of mutations on protein function](https://doi.org/10.1101/2021.07.09.450648) by Joshua Meier, Roshan Rao, Robert Verkuil, Jason Liu, Tom Sercu and Alexander Rives. **ESM-2 and ESMFold** were released with the paper [Language models of protein sequences at the scale of evolution enable accurate structure prediction](https://doi.org/10.1101/2022.07.20.500902) by Zeming Lin, Halil Akin, Roshan Rao, Brian Hie, Zhongkai Zhu, Wenting Lu, Allan dos Santos Costa, Maryam Fazel-Zarandi, Tom Sercu, Sal Candido, Alexander Rives.

|

||||

1. **[FastSpeech2Conformer](model_doc/fastspeech2_conformer)** (from ESPnet) released with the paper [Recent Developments On Espnet Toolkit Boosted By Conformer](https://arxiv.org/abs/2010.13956) by Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.

|

||||

1. **[FLAN-T5](model_doc/flan-t5)** (from Google AI) released in the repository [google-research/t5x](https://github.com/google-research/t5x/blob/main/docs/models.md#flan-t5-checkpoints) by Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei

|

||||

1. **[FlauBERT](model_doc/flaubert)** (from CNRS) released with the paper [FlauBERT: Unsupervised Language Model Pre-training for French](https://arxiv.org/abs/1912.05372) by Hang Le, Loïc Vial, Jibril Frej, Vincent Segonne, Maximin Coavoux, Benjamin Lecouteux, Alexandre Allauzen, Benoît Crabbé, Laurent Besacier, Didier Schwab.

|

||||

1. **[FLAVA](model_doc/flava)** (from Facebook AI) released with the paper [FLAVA: A Foundational Language And Vision Alignment Model](https://arxiv.org/abs/2112.04482) by Amanpreet Singh, Ronghang Hu, Vedanuj Goswami, Guillaume Couairon, Wojciech Galuba, Marcus Rohrbach, and Douwe Kiela.

|

||||

@ -290,6 +291,7 @@ Le tableau ci-dessous représente la prise en charge actuelle dans la bibliothè

|

||||

| ERNIE | ❌ | ❌ | ✅ | ❌ | ❌ |

|

||||

| ESM | ✅ | ❌ | ✅ | ✅ | ❌ |

|

||||

| FairSeq Machine-Translation | ✅ | ❌ | ✅ | ❌ | ❌ |

|

||||

| FastSpeech2Conformer | ✅ | ❌ | ✅ | ❌ | ❌ |

|

||||

| FlauBERT | ✅ | ❌ | ✅ | ✅ | ❌ |

|

||||

| FLAVA | ❌ | ❌ | ✅ | ❌ | ❌ |

|

||||

| FNet | ✅ | ✅ | ✅ | ❌ | ❌ |

|

||||

|

||||

@ -30,6 +30,7 @@ from .utils import (

|

||||

is_bitsandbytes_available,

|

||||

is_essentia_available,

|

||||

is_flax_available,

|

||||

is_g2p_en_available,

|

||||

is_keras_nlp_available,

|

||||

is_librosa_available,

|

||||

is_pretty_midi_available,

|

||||

@ -423,11 +424,16 @@ _import_structure = {

|

||||

"models.ernie_m": ["ERNIE_M_PRETRAINED_CONFIG_ARCHIVE_MAP", "ErnieMConfig"],

|

||||

"models.esm": ["ESM_PRETRAINED_CONFIG_ARCHIVE_MAP", "EsmConfig", "EsmTokenizer"],

|

||||

"models.falcon": ["FALCON_PRETRAINED_CONFIG_ARCHIVE_MAP", "FalconConfig"],

|

||||

"models.flaubert": [

|

||||

"FLAUBERT_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FlaubertConfig",

|

||||

"FlaubertTokenizer",

|

||||

"models.fastspeech2_conformer": [

|

||||

"FASTSPEECH2_CONFORMER_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FASTSPEECH2_CONFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FASTSPEECH2_CONFORMER_WITH_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FastSpeech2ConformerConfig",

|

||||

"FastSpeech2ConformerHifiGanConfig",

|

||||

"FastSpeech2ConformerTokenizer",

|

||||

"FastSpeech2ConformerWithHifiGanConfig",

|

||||

],

|

||||

"models.flaubert": ["FLAUBERT_PRETRAINED_CONFIG_ARCHIVE_MAP", "FlaubertConfig", "FlaubertTokenizer"],

|

||||

"models.flava": [

|

||||

"FLAVA_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FlavaConfig",

|

||||

@ -2126,6 +2132,15 @@ else:

|

||||

"FalconPreTrainedModel",

|

||||

]

|

||||

)

|

||||

_import_structure["models.fastspeech2_conformer"].extend(

|

||||

[

|

||||

"FASTSPEECH2_CONFORMER_PRETRAINED_MODEL_ARCHIVE_LIST",

|

||||

"FastSpeech2ConformerHifiGan",

|

||||

"FastSpeech2ConformerModel",

|

||||

"FastSpeech2ConformerPreTrainedModel",

|

||||

"FastSpeech2ConformerWithHifiGan",

|

||||

]

|

||||

)

|

||||

_import_structure["models.flaubert"].extend(

|

||||

[

|

||||

"FLAUBERT_PRETRAINED_MODEL_ARCHIVE_LIST",

|

||||

@ -5081,11 +5096,16 @@ if TYPE_CHECKING:

|

||||

from .models.ernie_m import ERNIE_M_PRETRAINED_CONFIG_ARCHIVE_MAP, ErnieMConfig

|

||||

from .models.esm import ESM_PRETRAINED_CONFIG_ARCHIVE_MAP, EsmConfig, EsmTokenizer

|

||||

from .models.falcon import FALCON_PRETRAINED_CONFIG_ARCHIVE_MAP, FalconConfig

|

||||

from .models.flaubert import (

|

||||

FLAUBERT_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FlaubertConfig,

|

||||

FlaubertTokenizer,

|

||||

from .models.fastspeech2_conformer import (

|

||||

FASTSPEECH2_CONFORMER_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FASTSPEECH2_CONFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FASTSPEECH2_CONFORMER_WITH_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FastSpeech2ConformerConfig,

|

||||

FastSpeech2ConformerHifiGanConfig,

|

||||

FastSpeech2ConformerTokenizer,

|

||||

FastSpeech2ConformerWithHifiGanConfig,

|

||||

)

|

||||

from .models.flaubert import FLAUBERT_PRETRAINED_CONFIG_ARCHIVE_MAP, FlaubertConfig, FlaubertTokenizer

|

||||

from .models.flava import (

|

||||

FLAVA_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FlavaConfig,

|

||||

@ -6652,6 +6672,13 @@ if TYPE_CHECKING:

|

||||

FalconModel,

|

||||

FalconPreTrainedModel,

|

||||

)

|

||||

from .models.fastspeech2_conformer import (

|

||||

FASTSPEECH2_CONFORMER_PRETRAINED_MODEL_ARCHIVE_LIST,

|

||||

FastSpeech2ConformerHifiGan,

|

||||

FastSpeech2ConformerModel,

|

||||

FastSpeech2ConformerPreTrainedModel,

|

||||

FastSpeech2ConformerWithHifiGan,

|

||||

)

|

||||

from .models.flaubert import (

|

||||

FLAUBERT_PRETRAINED_MODEL_ARCHIVE_LIST,

|

||||

FlaubertForMultipleChoice,

|

||||

|

||||

@ -84,6 +84,7 @@ from .utils import (

|

||||

is_faiss_available,

|

||||

is_flax_available,

|

||||

is_ftfy_available,

|

||||

is_g2p_en_available,

|

||||

is_in_notebook,

|

||||

is_ipex_available,

|

||||

is_librosa_available,

|

||||

|

||||

@ -83,6 +83,7 @@ from . import (

|

||||

ernie_m,

|

||||

esm,

|

||||

falcon,

|

||||

fastspeech2_conformer,

|

||||

flaubert,

|

||||

flava,

|

||||

fnet,

|

||||

|

||||

@ -93,6 +93,7 @@ CONFIG_MAPPING_NAMES = OrderedDict(

|

||||

("ernie_m", "ErnieMConfig"),

|

||||

("esm", "EsmConfig"),

|

||||

("falcon", "FalconConfig"),

|

||||

("fastspeech2_conformer", "FastSpeech2ConformerConfig"),

|

||||

("flaubert", "FlaubertConfig"),

|

||||

("flava", "FlavaConfig"),

|

||||

("fnet", "FNetConfig"),

|

||||

@ -319,6 +320,7 @@ CONFIG_ARCHIVE_MAP_MAPPING_NAMES = OrderedDict(

|

||||

("ernie_m", "ERNIE_M_PRETRAINED_CONFIG_ARCHIVE_MAP"),

|

||||

("esm", "ESM_PRETRAINED_CONFIG_ARCHIVE_MAP"),

|

||||

("falcon", "FALCON_PRETRAINED_CONFIG_ARCHIVE_MAP"),

|

||||

("fastspeech2_conformer", "FASTSPEECH2_CONFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP"),

|

||||

("flaubert", "FLAUBERT_PRETRAINED_CONFIG_ARCHIVE_MAP"),

|

||||

("flava", "FLAVA_PRETRAINED_CONFIG_ARCHIVE_MAP"),

|

||||

("fnet", "FNET_PRETRAINED_CONFIG_ARCHIVE_MAP"),

|

||||

@ -542,6 +544,7 @@ MODEL_NAMES_MAPPING = OrderedDict(

|

||||

("ernie_m", "ErnieM"),

|

||||

("esm", "ESM"),

|

||||

("falcon", "Falcon"),

|

||||

("fastspeech2_conformer", "FastSpeech2Conformer"),

|

||||

("flan-t5", "FLAN-T5"),

|

||||

("flan-ul2", "FLAN-UL2"),

|

||||

("flaubert", "FlauBERT"),

|

||||

|

||||

@ -95,6 +95,7 @@ MODEL_MAPPING_NAMES = OrderedDict(

|

||||

("ernie_m", "ErnieMModel"),

|

||||

("esm", "EsmModel"),

|

||||

("falcon", "FalconModel"),

|

||||

("fastspeech2_conformer", "FastSpeech2ConformerModel"),

|

||||

("flaubert", "FlaubertModel"),

|

||||

("flava", "FlavaModel"),

|

||||

("fnet", "FNetModel"),

|

||||

@ -1075,6 +1076,7 @@ MODEL_FOR_AUDIO_XVECTOR_MAPPING_NAMES = OrderedDict(

|

||||

MODEL_FOR_TEXT_TO_SPECTROGRAM_MAPPING_NAMES = OrderedDict(

|

||||

[

|

||||

# Model for Text-To-Spectrogram mapping

|

||||

("fastspeech2_conformer", "FastSpeech2ConformerModel"),

|

||||

("speecht5", "SpeechT5ForTextToSpeech"),

|

||||

]

|

||||

)

|

||||

@ -1083,6 +1085,7 @@ MODEL_FOR_TEXT_TO_WAVEFORM_MAPPING_NAMES = OrderedDict(

|

||||

[

|

||||

# Model for Text-To-Waveform mapping

|

||||

("bark", "BarkModel"),

|

||||

("fastspeech2_conformer", "FastSpeech2ConformerWithHifiGan"),

|

||||

("musicgen", "MusicgenForConditionalGeneration"),

|

||||

("seamless_m4t", "SeamlessM4TForTextToSpeech"),

|

||||

("seamless_m4t_v2", "SeamlessM4Tv2ForTextToSpeech"),

|

||||

|

||||

@ -25,7 +25,14 @@ from ...configuration_utils import PretrainedConfig

|

||||

from ...dynamic_module_utils import get_class_from_dynamic_module, resolve_trust_remote_code

|

||||

from ...tokenization_utils import PreTrainedTokenizer

|

||||

from ...tokenization_utils_base import TOKENIZER_CONFIG_FILE

|

||||

from ...utils import cached_file, extract_commit_hash, is_sentencepiece_available, is_tokenizers_available, logging

|

||||

from ...utils import (

|

||||

cached_file,

|

||||

extract_commit_hash,

|

||||

is_g2p_en_available,

|

||||

is_sentencepiece_available,

|

||||

is_tokenizers_available,

|

||||

logging,

|

||||

)

|

||||

from ..encoder_decoder import EncoderDecoderConfig

|

||||

from .auto_factory import _LazyAutoMapping

|

||||

from .configuration_auto import (

|

||||

@ -163,6 +170,10 @@ else:

|

||||

("ernie_m", ("ErnieMTokenizer" if is_sentencepiece_available() else None, None)),

|

||||

("esm", ("EsmTokenizer", None)),

|

||||

("falcon", (None, "PreTrainedTokenizerFast" if is_tokenizers_available() else None)),

|

||||

(

|

||||

"fastspeech2_conformer",

|

||||

("FastSpeech2ConformerTokenizer" if is_g2p_en_available() else None, None),

|

||||

),

|

||||

("flaubert", ("FlaubertTokenizer", None)),

|

||||

("fnet", ("FNetTokenizer", "FNetTokenizerFast" if is_tokenizers_available() else None)),

|

||||

("fsmt", ("FSMTTokenizer", None)),

|

||||

|

||||

77

src/transformers/models/fastspeech2_conformer/__init__.py

Normal file

77

src/transformers/models/fastspeech2_conformer/__init__.py

Normal file

@ -0,0 +1,77 @@

|

||||

# Copyright 2023 The HuggingFace Team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

from typing import TYPE_CHECKING

|

||||

|

||||

from ...utils import (

|

||||

OptionalDependencyNotAvailable,

|

||||

_LazyModule,

|

||||

is_torch_available,

|

||||

)

|

||||

|

||||

|

||||

_import_structure = {

|

||||

"configuration_fastspeech2_conformer": [

|

||||

"FASTSPEECH2_CONFORMER_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FASTSPEECH2_CONFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FASTSPEECH2_CONFORMER_WITH_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP",

|

||||

"FastSpeech2ConformerConfig",

|

||||

"FastSpeech2ConformerHifiGanConfig",

|

||||

"FastSpeech2ConformerWithHifiGanConfig",

|

||||

],

|

||||

"tokenization_fastspeech2_conformer": ["FastSpeech2ConformerTokenizer"],

|

||||

}

|

||||

|

||||

try:

|

||||

if not is_torch_available():

|

||||

raise OptionalDependencyNotAvailable()

|

||||

except OptionalDependencyNotAvailable:

|

||||

pass

|

||||

else:

|

||||

_import_structure["modeling_fastspeech2_conformer"] = [

|

||||

"FASTSPEECH2_CONFORMER_PRETRAINED_MODEL_ARCHIVE_LIST",

|

||||

"FastSpeech2ConformerWithHifiGan",

|

||||

"FastSpeech2ConformerHifiGan",

|

||||

"FastSpeech2ConformerModel",

|

||||

"FastSpeech2ConformerPreTrainedModel",

|

||||

]

|

||||

|

||||

if TYPE_CHECKING:

|

||||

from .configuration_fastspeech2_conformer import (

|

||||

FASTSPEECH2_CONFORMER_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FASTSPEECH2_CONFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FASTSPEECH2_CONFORMER_WITH_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP,

|

||||

FastSpeech2ConformerConfig,

|

||||

FastSpeech2ConformerHifiGanConfig,

|

||||

FastSpeech2ConformerWithHifiGanConfig,

|

||||

)

|

||||

from .tokenization_fastspeech2_conformer import FastSpeech2ConformerTokenizer

|

||||

|

||||

try:

|

||||

if not is_torch_available():

|

||||

raise OptionalDependencyNotAvailable()

|

||||

except OptionalDependencyNotAvailable:

|

||||

pass

|

||||

else:

|

||||

from .modeling_fastspeech2_conformer import (

|

||||

FASTSPEECH2_CONFORMER_PRETRAINED_MODEL_ARCHIVE_LIST,

|

||||

FastSpeech2ConformerHifiGan,

|

||||

FastSpeech2ConformerModel,

|

||||

FastSpeech2ConformerPreTrainedModel,

|

||||

FastSpeech2ConformerWithHifiGan,

|

||||

)

|

||||

|

||||

else:

|

||||

import sys

|

||||

|

||||

sys.modules[__name__] = _LazyModule(__name__, globals()["__file__"], _import_structure, module_spec=__spec__)

|

||||

@ -0,0 +1,488 @@

|

||||

# coding=utf-8

|

||||

# Copyright 2023 The HuggingFace Inc. team. All rights reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

""" FastSpeech2Conformer model configuration"""

|

||||

|

||||

from typing import Dict

|

||||

|

||||

from ...configuration_utils import PretrainedConfig

|

||||

from ...utils import logging

|

||||

|

||||

|

||||

logger = logging.get_logger(__name__)

|

||||

|

||||

|

||||

FASTSPEECH2_CONFORMER_PRETRAINED_CONFIG_ARCHIVE_MAP = {

|

||||

"espnet/fastspeech2_conformer": "https://huggingface.co/espnet/fastspeech2_conformer/raw/main/config.json",

|

||||

}

|

||||

|

||||

FASTSPEECH2_CONFORMER_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP = {

|

||||

"espnet/fastspeech2_conformer_hifigan": "https://huggingface.co/espnet/fastspeech2_conformer_hifigan/raw/main/config.json",

|

||||

}

|

||||

|

||||

FASTSPEECH2_CONFORMER_WITH_HIFIGAN_PRETRAINED_CONFIG_ARCHIVE_MAP = {

|

||||

"espnet/fastspeech2_conformer_with_hifigan": "https://huggingface.co/espnet/fastspeech2_conformer_with_hifigan/raw/main/config.json",

|

||||

}

|

||||

|

||||

|

||||

class FastSpeech2ConformerConfig(PretrainedConfig):

|

||||

r"""

|

||||

This is the configuration class to store the configuration of a [`FastSpeech2ConformerModel`]. It is used to

|

||||

instantiate a FastSpeech2Conformer model according to the specified arguments, defining the model architecture.

|

||||

Instantiating a configuration with the defaults will yield a similar configuration to that of the

|

||||

FastSpeech2Conformer [espnet/fastspeech2_conformer](https://huggingface.co/espnet/fastspeech2_conformer)

|

||||

architecture.

|

||||

|

||||

Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

|

||||

documentation from [`PretrainedConfig`] for more information.

|

||||

|

||||

Args:

|

||||

hidden_size (`int`, *optional*, defaults to 384):

|

||||

The dimensionality of the hidden layers.

|

||||

vocab_size (`int`, *optional*, defaults to 78):

|

||||

The size of the vocabulary.

|

||||

num_mel_bins (`int`, *optional*, defaults to 80):

|

||||

The number of mel filters used in the filter bank.

|

||||

encoder_num_attention_heads (`int`, *optional*, defaults to 2):

|

||||

The number of attention heads in the encoder.

|

||||

encoder_layers (`int`, *optional*, defaults to 4):

|

||||

The number of layers in the encoder.

|

||||

encoder_linear_units (`int`, *optional*, defaults to 1536):

|

||||

The number of units in the linear layer of the encoder.

|

||||

decoder_layers (`int`, *optional*, defaults to 4):

|

||||

The number of layers in the decoder.

|

||||

decoder_num_attention_heads (`int`, *optional*, defaults to 2):

|

||||

The number of attention heads in the decoder.

|

||||

decoder_linear_units (`int`, *optional*, defaults to 1536):

|

||||

The number of units in the linear layer of the decoder.

|

||||

speech_decoder_postnet_layers (`int`, *optional*, defaults to 5):

|

||||

The number of layers in the post-net of the speech decoder.

|

||||

speech_decoder_postnet_units (`int`, *optional*, defaults to 256):

|

||||

The number of units in the post-net layers of the speech decoder.

|

||||

speech_decoder_postnet_kernel (`int`, *optional*, defaults to 5):

|

||||

The kernel size in the post-net of the speech decoder.

|

||||

positionwise_conv_kernel_size (`int`, *optional*, defaults to 3):

|

||||

The size of the convolution kernel used in the position-wise layer.

|

||||

encoder_normalize_before (`bool`, *optional*, defaults to `False`):

|

||||

Specifies whether to normalize before encoder layers.

|

||||

decoder_normalize_before (`bool`, *optional*, defaults to `False`):

|

||||

Specifies whether to normalize before decoder layers.

|

||||

encoder_concat_after (`bool`, *optional*, defaults to `False`):

|

||||

Specifies whether to concatenate after encoder layers.

|

||||

decoder_concat_after (`bool`, *optional*, defaults to `False`):

|

||||

Specifies whether to concatenate after decoder layers.

|

||||

reduction_factor (`int`, *optional*, defaults to 1):

|

||||

The factor by which the speech frame rate is reduced.

|

||||

speaking_speed (`float`, *optional*, defaults to 1.0):

|

||||

The speed of the speech produced.

|

||||

use_macaron_style_in_conformer (`bool`, *optional*, defaults to `True`):

|

||||

Specifies whether to use macaron style in the conformer.

|

||||

use_cnn_in_conformer (`bool`, *optional*, defaults to `True`):

|

||||

Specifies whether to use convolutional neural networks in the conformer.

|

||||

encoder_kernel_size (`int`, *optional*, defaults to 7):

|

||||

The kernel size used in the encoder.

|

||||

decoder_kernel_size (`int`, *optional*, defaults to 31):

|

||||

The kernel size used in the decoder.

|

||||

duration_predictor_layers (`int`, *optional*, defaults to 2):

|

||||

The number of layers in the duration predictor.

|

||||

duration_predictor_channels (`int`, *optional*, defaults to 256):

|

||||

The number of channels in the duration predictor.

|

||||

duration_predictor_kernel_size (`int`, *optional*, defaults to 3):

|

||||

The kernel size used in the duration predictor.

|

||||

energy_predictor_layers (`int`, *optional*, defaults to 2):

|

||||

The number of layers in the energy predictor.

|

||||

energy_predictor_channels (`int`, *optional*, defaults to 256):

|

||||

The number of channels in the energy predictor.

|

||||

energy_predictor_kernel_size (`int`, *optional*, defaults to 3):

|

||||

The kernel size used in the energy predictor.

|

||||

energy_predictor_dropout (`float`, *optional*, defaults to 0.5):

|

||||

The dropout rate in the energy predictor.

|

||||

energy_embed_kernel_size (`int`, *optional*, defaults to 1):

|

||||

The kernel size used in the energy embed layer.

|

||||

energy_embed_dropout (`float`, *optional*, defaults to 0.0):

|

||||

The dropout rate in the energy embed layer.

|

||||

stop_gradient_from_energy_predictor (`bool`, *optional*, defaults to `False`):

|

||||

Specifies whether to stop gradients from the energy predictor.

|

||||

pitch_predictor_layers (`int`, *optional*, defaults to 5):

|

||||

The number of layers in the pitch predictor.

|

||||

pitch_predictor_channels (`int`, *optional*, defaults to 256):

|

||||

The number of channels in the pitch predictor.

|

||||

pitch_predictor_kernel_size (`int`, *optional*, defaults to 5):

|

||||

The kernel size used in the pitch predictor.

|

||||

pitch_predictor_dropout (`float`, *optional*, defaults to 0.5):

|

||||

The dropout rate in the pitch predictor.

|

||||

pitch_embed_kernel_size (`int`, *optional*, defaults to 1):

|

||||

The kernel size used in the pitch embed layer.

|

||||

pitch_embed_dropout (`float`, *optional*, defaults to 0.0):

|

||||

The dropout rate in the pitch embed layer.

|

||||

stop_gradient_from_pitch_predictor (`bool`, *optional*, defaults to `True`):

|

||||

Specifies whether to stop gradients from the pitch predictor.

|

||||

encoder_dropout_rate (`float`, *optional*, defaults to 0.2):

|

||||

The dropout rate in the encoder.

|

||||

encoder_positional_dropout_rate (`float`, *optional*, defaults to 0.2):

|

||||

The positional dropout rate in the encoder.

|

||||

encoder_attention_dropout_rate (`float`, *optional*, defaults to 0.2):

|

||||

The attention dropout rate in the encoder.

|

||||

decoder_dropout_rate (`float`, *optional*, defaults to 0.2):

|

||||

The dropout rate in the decoder.

|

||||

decoder_positional_dropout_rate (`float`, *optional*, defaults to 0.2):

|

||||

The positional dropout rate in the decoder.

|

||||

decoder_attention_dropout_rate (`float`, *optional*, defaults to 0.2):

|

||||

The attention dropout rate in the decoder.

|

||||

duration_predictor_dropout_rate (`float`, *optional*, defaults to 0.2):

|

||||

The dropout rate in the duration predictor.

|

||||

speech_decoder_postnet_dropout (`float`, *optional*, defaults to 0.5):

|

||||

The dropout rate in the speech decoder postnet.

|

||||

max_source_positions (`int`, *optional*, defaults to 5000):

|

||||

if `"relative"` position embeddings are used, defines the maximum source input positions.

|

||||

use_masking (`bool`, *optional*, defaults to `True`):

|

||||

Specifies whether to use masking in the model.

|

||||

use_weighted_masking (`bool`, *optional*, defaults to `False`):

|

||||

Specifies whether to use weighted masking in the model.

|

||||

num_speakers (`int`, *optional*):

|

||||

Number of speakers. If set to > 1, assume that the speaker ids will be provided as the input and use

|

||||

speaker id embedding layer.

|

||||

num_languages (`int`, *optional*):

|

||||

Number of languages. If set to > 1, assume that the language ids will be provided as the input and use the

|

||||

languge id embedding layer.

|

||||

speaker_embed_dim (`int`, *optional*):

|

||||

Speaker embedding dimension. If set to > 0, assume that speaker_embedding will be provided as the input.

|

||||

is_encoder_decoder (`bool`, *optional*, defaults to `True`):

|

||||

Specifies whether the model is an encoder-decoder.

|

||||

|

||||

Example:

|

||||

|

||||

```python

|

||||

>>> from transformers import FastSpeech2ConformerModel, FastSpeech2ConformerConfig

|

||||

|

||||

>>> # Initializing a FastSpeech2Conformer style configuration

|

||||

>>> configuration = FastSpeech2ConformerConfig()

|

||||

|

||||

>>> # Initializing a model from the FastSpeech2Conformer style configuration

|

||||

>>> model = FastSpeech2ConformerModel(configuration)

|

||||

|

||||

>>> # Accessing the model configuration

|

||||

>>> configuration = model.config

|

||||

```"""

|

||||

|

||||

model_type = "fastspeech2_conformer"

|

||||

attribute_map = {"num_hidden_layers": "encoder_layers", "num_attention_heads": "encoder_num_attention_heads"}

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

hidden_size=384,

|

||||

vocab_size=78,

|

||||

num_mel_bins=80,

|

||||

encoder_num_attention_heads=2,

|

||||

encoder_layers=4,

|

||||

encoder_linear_units=1536,

|

||||

decoder_layers=4,

|

||||

decoder_num_attention_heads=2,

|

||||

decoder_linear_units=1536,

|

||||

speech_decoder_postnet_layers=5,

|

||||

speech_decoder_postnet_units=256,

|

||||

speech_decoder_postnet_kernel=5,

|

||||

positionwise_conv_kernel_size=3,

|

||||

encoder_normalize_before=False,

|

||||

decoder_normalize_before=False,

|

||||

encoder_concat_after=False,

|

||||

decoder_concat_after=False,

|

||||

reduction_factor=1,

|

||||

speaking_speed=1.0,

|

||||

use_macaron_style_in_conformer=True,

|

||||

use_cnn_in_conformer=True,

|

||||

encoder_kernel_size=7,

|

||||

decoder_kernel_size=31,

|

||||

duration_predictor_layers=2,

|

||||

duration_predictor_channels=256,

|

||||

duration_predictor_kernel_size=3,

|

||||

energy_predictor_layers=2,

|

||||

energy_predictor_channels=256,

|

||||

energy_predictor_kernel_size=3,

|

||||

energy_predictor_dropout=0.5,

|

||||

energy_embed_kernel_size=1,

|

||||

energy_embed_dropout=0.0,

|

||||

stop_gradient_from_energy_predictor=False,

|

||||

pitch_predictor_layers=5,

|

||||

pitch_predictor_channels=256,

|

||||

pitch_predictor_kernel_size=5,

|

||||

pitch_predictor_dropout=0.5,

|

||||

pitch_embed_kernel_size=1,

|

||||

pitch_embed_dropout=0.0,

|

||||

stop_gradient_from_pitch_predictor=True,

|

||||

encoder_dropout_rate=0.2,

|

||||